Provisioning Database Security thru Visual Studio Database Projects and Deploying via DevOps YAML Pipelines

The purpose of this article is to explain how access to an SQL Database can be configured from a Visual Studio (VS) Project in order to be deployed along with a DACPAC thru DevOps pipelines.

Introduction

This article will guide you through the process of provisioning security configurations, such as creating users and permissions, using a Visual Studio Database Project (DACPAC), and seamlessly deploying it via DevOps YAML pipelines. By following these steps, you can ensure the security and integrity of your database throughout its lifecycle.

Couple of things you should know...

I'm not going to explain how to create a Visual Studio Database Project, the would be the topic for another article with a focus on how to do it properly using MSFT DevOps Git-repo; I would also use Azure DevOps YAML pipeline to automatically build and deploy the Database Project (DCPAC), hence, I'm also assuming you have some experience with these group of cloud services or similar orchestration engines (e.g. GitHub actions) and Azure platform in general.

Tools and services used...

- Source SQL Database (Azure or On-prem)

- Target Azure SQL Database

- Azure Key Vault

- Visual Studio with SQL Server Data Tools

- Azure DevOps services

Setting the Stage

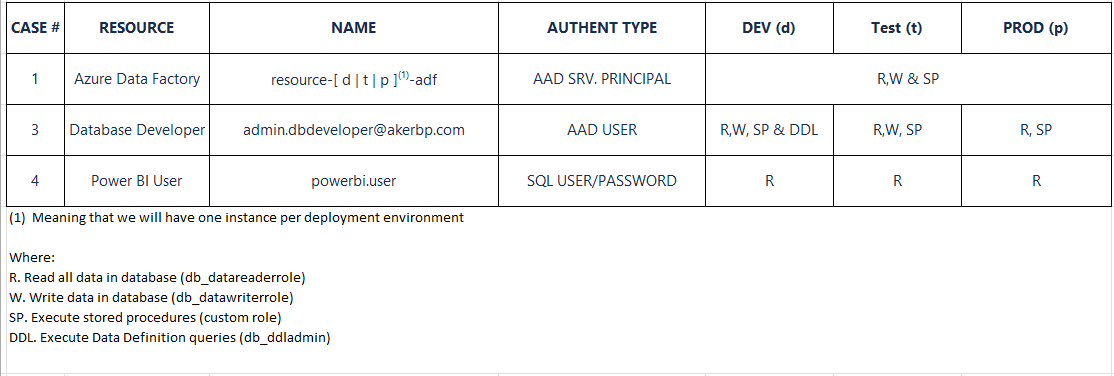

In general, it is expected for each database to have a security configuration specific for it's intended purpose and to adhere to the principle of least privilege, as a consequence developers won't require the same permissions to perform development-like activities as opposed to production to for support-like activities (or no access at all in some cases). In my case, I wanted to do the following...

- I wanted to take my database in development and deploy to production, and to

- Create and grant permission to a data factory (AD Managed Identity), a developer (AD User) and a SQL User with password (SQL Auth)

- I needed to use a secure solution to obtain the password for the SQL user, so, a decided to use Azure Key Vault service to store & retrieve it.

Security configuration for the database

Solution

Great, let's make it happen! I would assume that you have database project created [How to: Create a New Database Project] and a SQL Database imported into the project [Import into a Database Project].

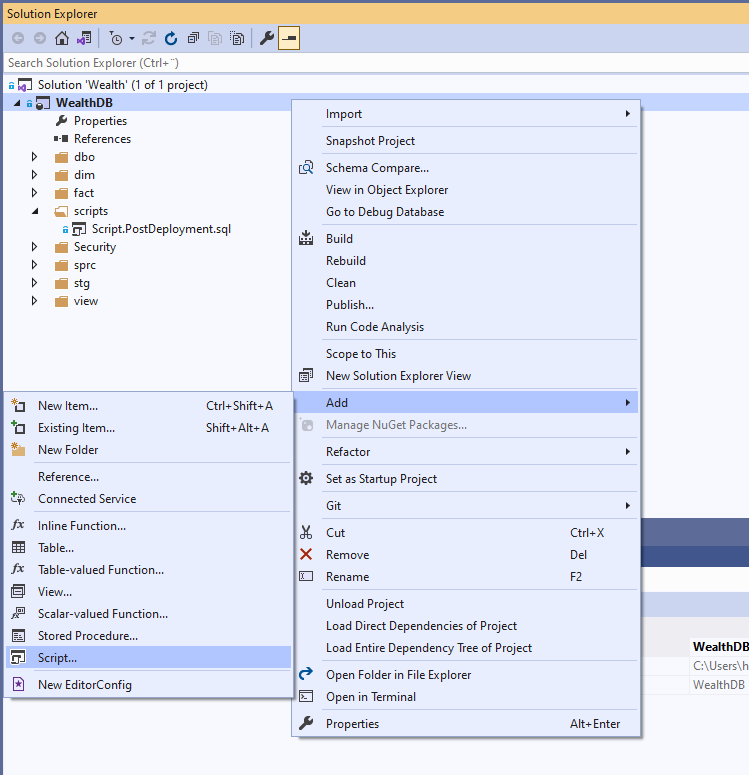

Step 1. Create a Post-deployment script

In Solution explorer, right click on the database project and from the context menu click Add > Script…

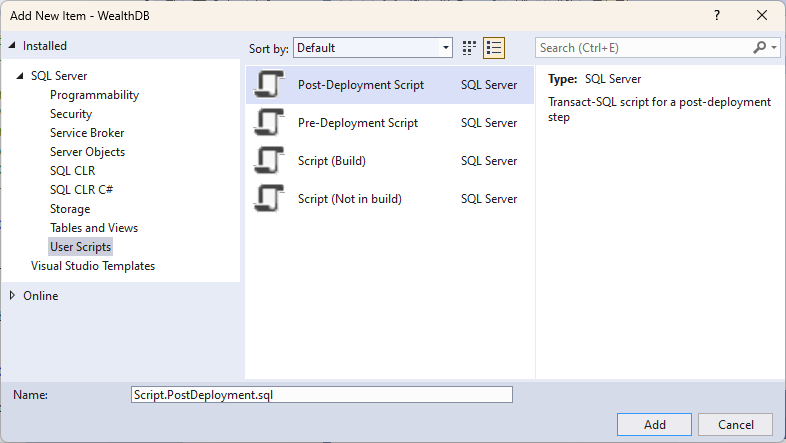

Select Post-Deployment Script (optionally, specify a non-default name)

Step 2. Provisioning access to Azure Data Factory (Case #1)

As noted in the Security configuration for the database section, we have an Azure Data Factory per deployment environment, hence, we need to adjust the script dynamically, in order to do so, we will be using SQLCMD Variables.

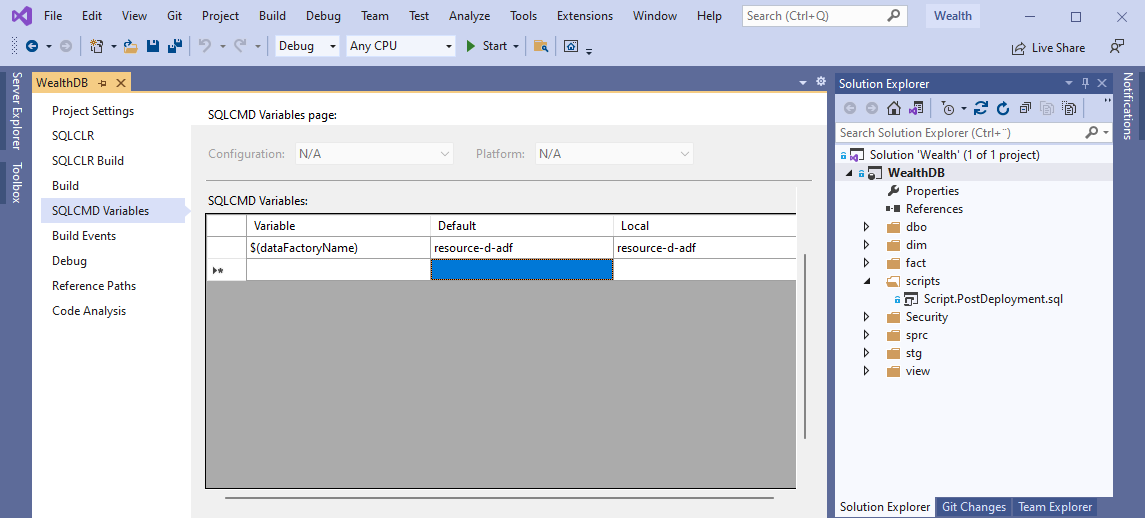

In Solution Explorer, right click on the database project and from the context menu click Properties, click on SQLCMD Variables and add a new variable named dataFactoryName

Open the post-deployment script (currently empty) and add the following code

IF NOT EXISTS (SELECT [name] FROM sys.database_principals WHERE [name] = 'db_executor' and Type = 'R')

BEGIN

CREATE ROLE [db_executor]

END

GO

GRANT EXECUTE TO [db_executor]

GO

IF NOT EXISTS (SELECT [name] FROM sys.database_principals WHERE [name] = '$(dataFactoryName)')

BEGIN

CREATE USER [$(dataFactoryName)] FROM EXTERNAL PROVIDER;

END

GO

--Permissions to Data Factory granted to All environments

EXEC SP_ADDROLEMEMBER 'db_datareader','$(dataFactoryName)';

EXEC SP_ADDROLEMEMBER 'db_datawriter','$(dataFactoryName)';

EXEC SP_ADDROLEMEMBER 'db_executor','$(dataFactoryName)';We are creating one non-standard role calleddb_executorto grant the permission to execute stored procedures; we check if the database principal exists, if not we created withCREATE USER [$(dataFactoryName)] FROM EXTERNAL PROVIDER;

Step 3. Provisioning access to Database Developer (Case #2)

In this case, Developer’s name would remain constant across all deployment environments, BUT, noticed the permissions are not the same, so, we will need to add one more SQLCMD Variable.

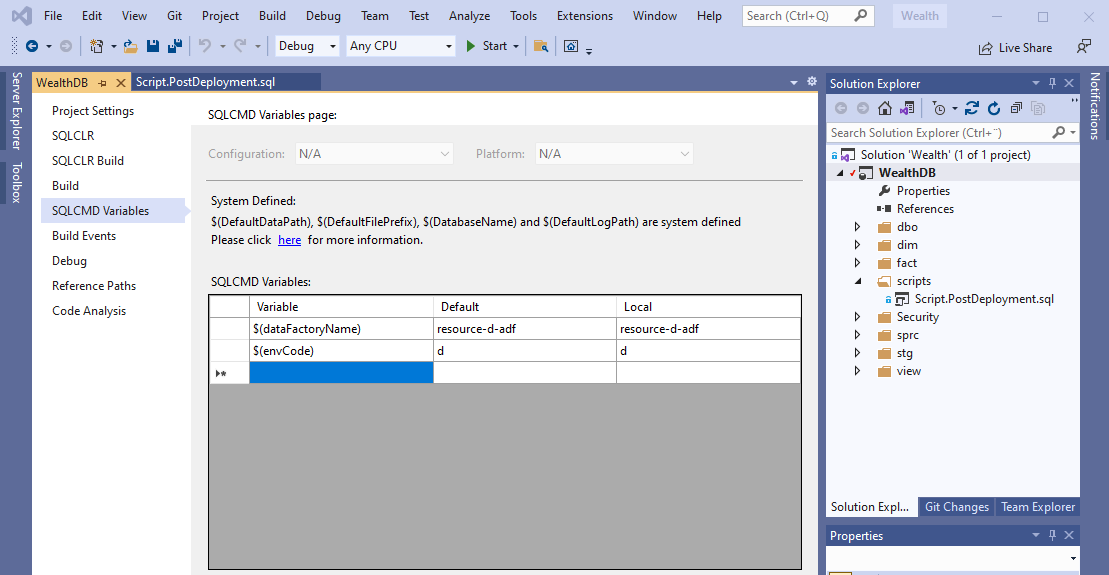

In Solution Explorer, right click on the database project and from the context menu click Properties, click on SQLCMD Variables and add a new variables named envCode

Open the post-deployment script and append the following code

IF NOT EXISTS (SELECT [name] FROM sys.database_principals WHERE [name] = 'admin.dbdeveloper@akerbp.com')

BEGIN

CREATE USER [admin.dbdeveloper@akerbp.com] FROM EXTERNAL PROVIDER

END

GO

IF '$(envCode)' = 'd'

BEGIN

EXEC SP_ADDROLEMEMBER 'db_datareader','admin.dbdeveloper@akerbp.com'

EXEC SP_ADDROLEMEMBER 'db_datawriter','admin.dbdeveloper@akerbp.com'

EXEC SP_ADDROLEMEMBER 'db_executor',admin.dbdeveloper@akerbp.com';

EXEC SP_ADDROLEMEMBER 'db_ddladmin','admin.dbdeveloper@akerbp.com'

END

GO

IF '$(envCode)' = 't'

BEGIN

EXEC SP_ADDROLEMEMBER 'db_datareader','admin.dbdeveloper@akerbp.com'

EXEC SP_ADDROLEMEMBER 'db_datawriter','admin.dbdeveloper@akerbp.com'

EXEC SP_ADDROLEMEMBER 'db_executor','admin.dbdeveloper@akerbp.com';

END

GO

IF '$(envCode)' = 'p'

BEGIN

EXEC SP_ADDROLEMEMBER 'db_datareader','admin.dbdeveloper@akerbp.com'

EXEC SP_ADDROLEMEMBER 'db_executor','admin.dbdeveloper@akerbp.com';

END

GOSame as prev. case, we check if the SQL principal already exists, if not, we created using FROM EXTERNAL PROVIDER that indicates an Azure AD principal; depending on the value received by the DACPAC for envCode, different permissions would be granted to the user per environment.

Step 4. Create SQL user Power BI User and provision access (Case #3)

With it comes to SQL users, we need to specify along with the user name it’s password, but it is important to remember to ...

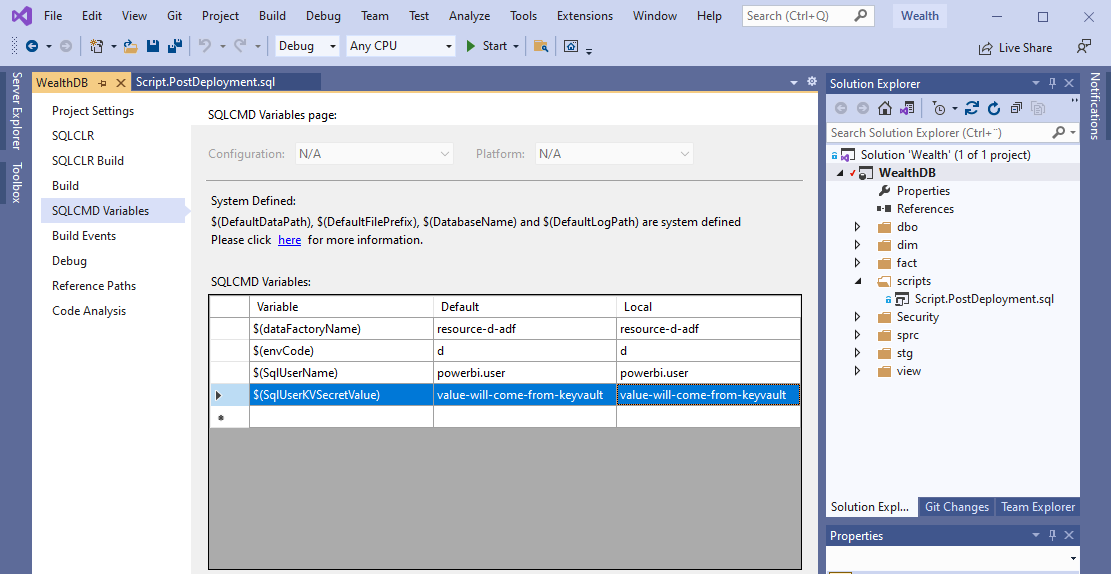

In Solution Explorer, right click on the database project and from the context menu click Properties, click on SQLCMD Variables and add two new variables: SqlUserName and SqlUserKVSecretValue

Open the post deployment script and append the following code

IF NOT EXISTS (SELECT [name] FROM sys.database_principals WHERE [name] = '$(SqlUserName)')

BEGIN

CREATE USER [$(SqlUserName)] WITH PASSWORD = '$(SqlUserKVSecretValue)'

END

GO

--Permissions to Data Factory granted to All environments

EXEC SP_ADDROLEMEMBER 'db_datareader','$(SqlUserName)';Step 5. Ingest send variables to DACPAC from DevOps YAML pipeline

I couldn't find an step-by-step article that explains how to build a data-tier package or DACPAC using Azure DevOps YAML pipelines, the best I can do for the time being is to share with you guys my code "as-is" but I will create a complementary article shortly with a full explanation, so, stay tuned!

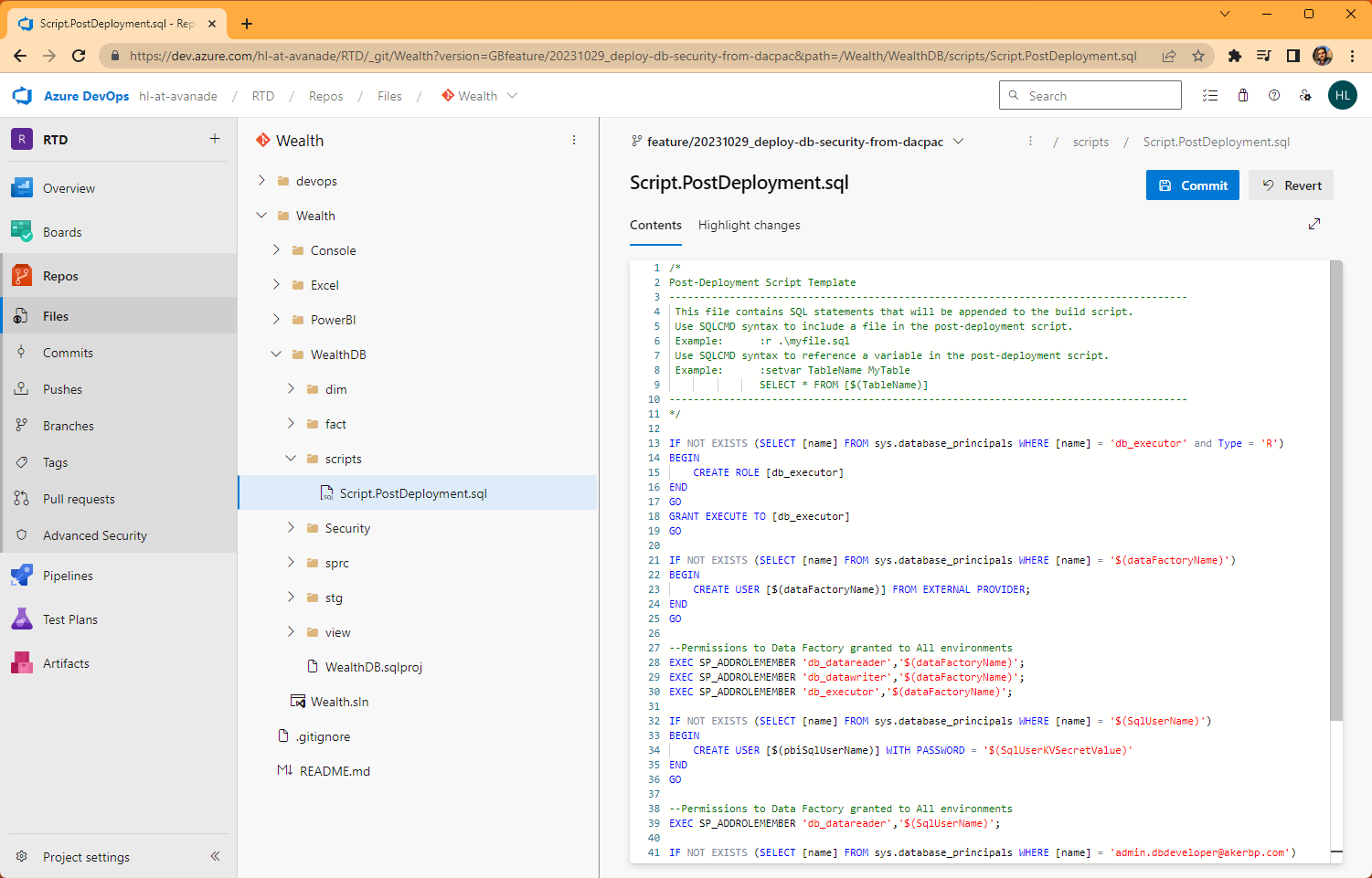

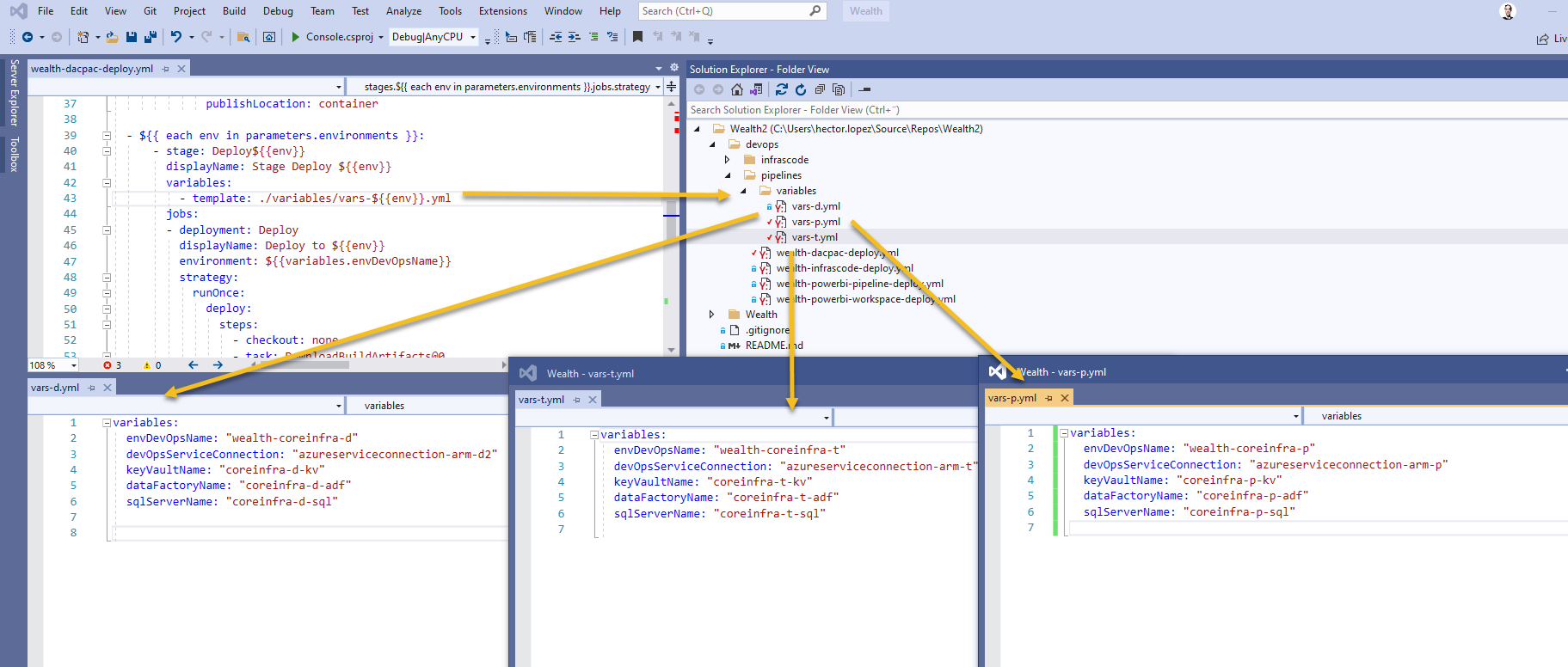

In my case, I already have the Database Project hosted in an Azure DevOps Git-repository as shown below.

Next step is to "Build" the Visual Studio solution (*.sln) that contains the Database Project, the output for this operation is the DACPAC!

A data-tier application (DAC) is a logical database entity that defines all of the SQL Server objects - such as tables, views, and instance objects, including logins - associated with a user's database. A DAC is a self-contained unit of the entire database model and is portable in an artifact known as a DAC package, or .dacpac.

There are different tools we can use to Build the solution, but we are going to use an Azure DevOps YAML pipeline [Key concepts for new Azure Pipelines users]

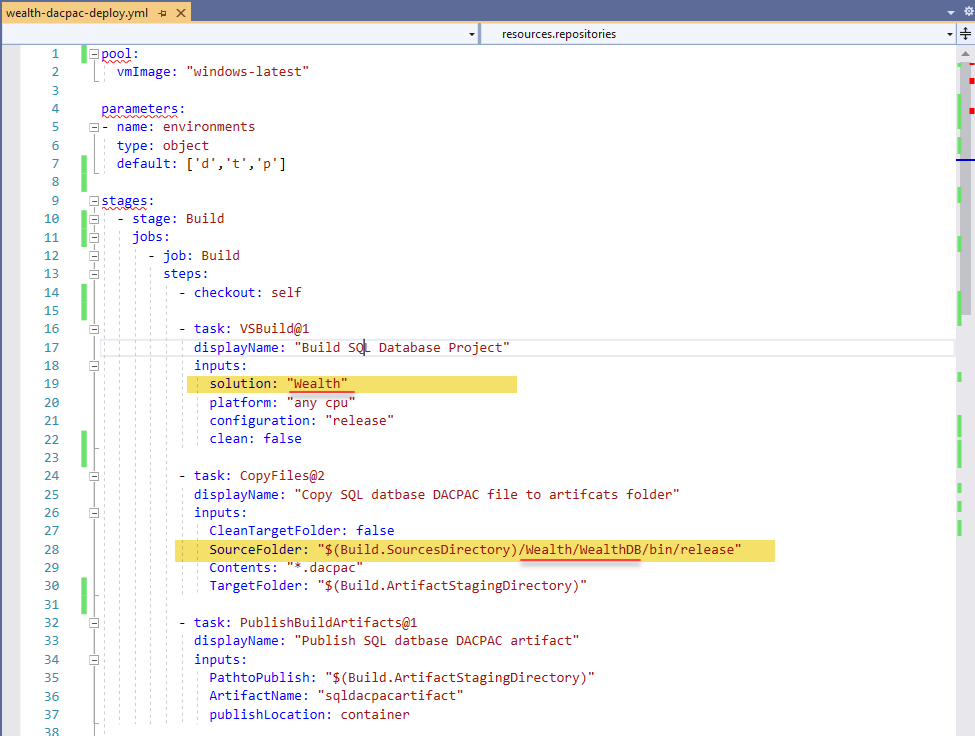

Create a new YAML pipeline and add the following code, replacing from lines 19 and 28 with the corresponding path to the solution and name of database name.

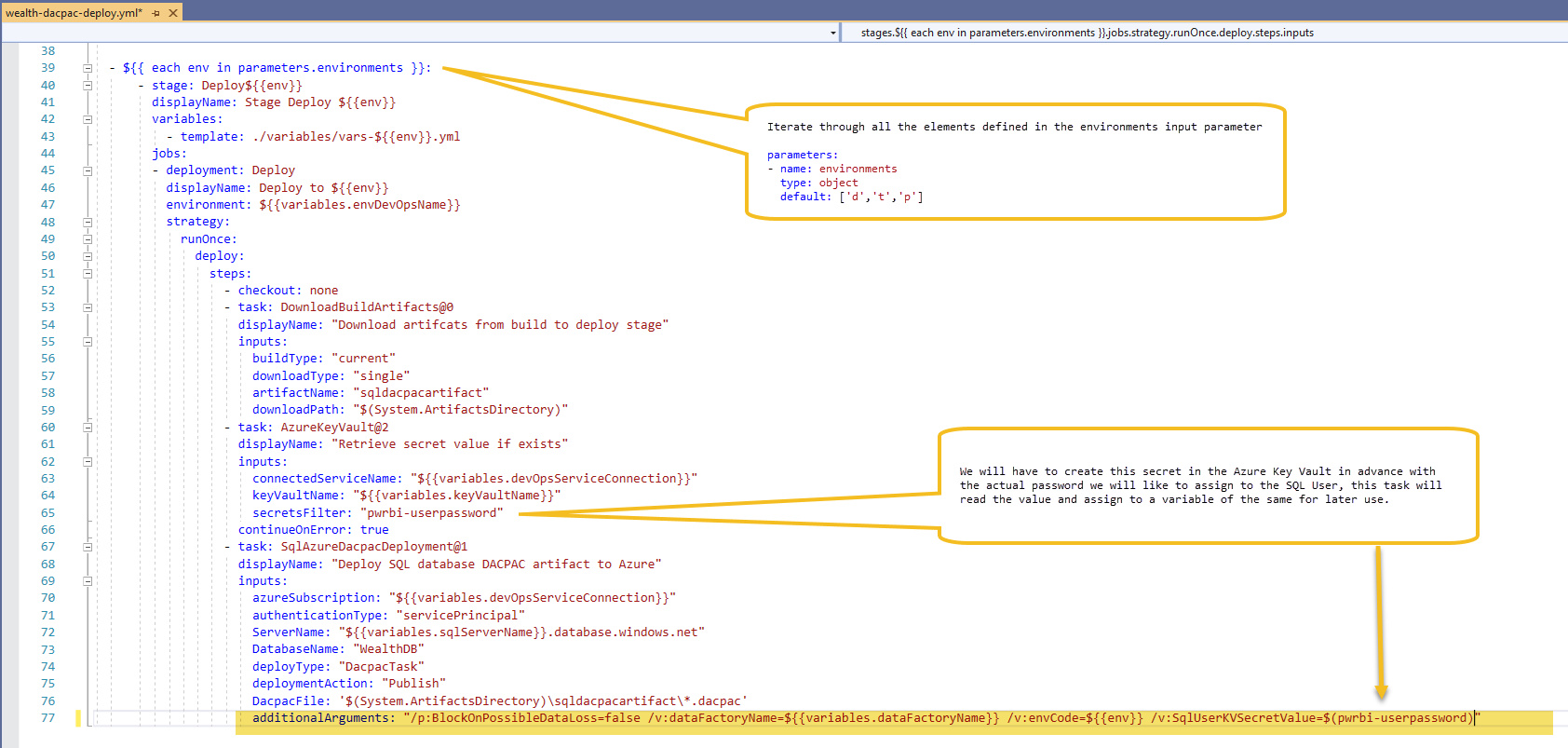

The second part of the code it's bit more complex as it uses an iterator and variables.

The pipelines also uses variable files, this way, for each environment, we need to create a new variable file with a basic naming convention as shown

I'm assuming all of reference resources in the variables exists within your Azure subscription in one or many resource groups, if so, last step of to start the pipeline, hopefully, everything will work and you will be one happy developer!

Conclusion

In this article, we've explored the seamless process of provisioning security configurations in a database using Visual Studio Database Projects and DACPACs. For readers who are new to Visual Studio, DevOps practices, or related concepts, please be aware that this article assumes a basic familiarity with these topics. To gain a better understanding of these foundational concepts, we recommend referring to our upcoming articles, which will cover them in detail. In this article, we've demonstrated how to automate the deployment of these configurations through DevOps YAML pipelines. By following these practices, you can enhance the security and efficiency of your database management, ensuring your data remains safe and accessible to authorized users.

Call to Action

Now that you've learned the process of database security provisioning and deployment automation, it's time to put this knowledge into practice. Start by implementing these techniques in your own projects and explore further possibilities for improving database management and security. Stay tuned for our next articles, which will provide comprehensive coverage of Visual Studio, DevOps practices, and related topics.