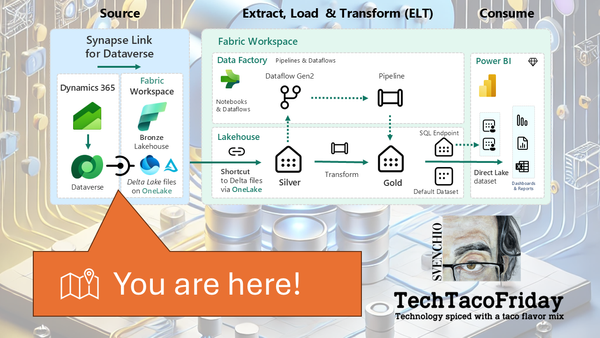

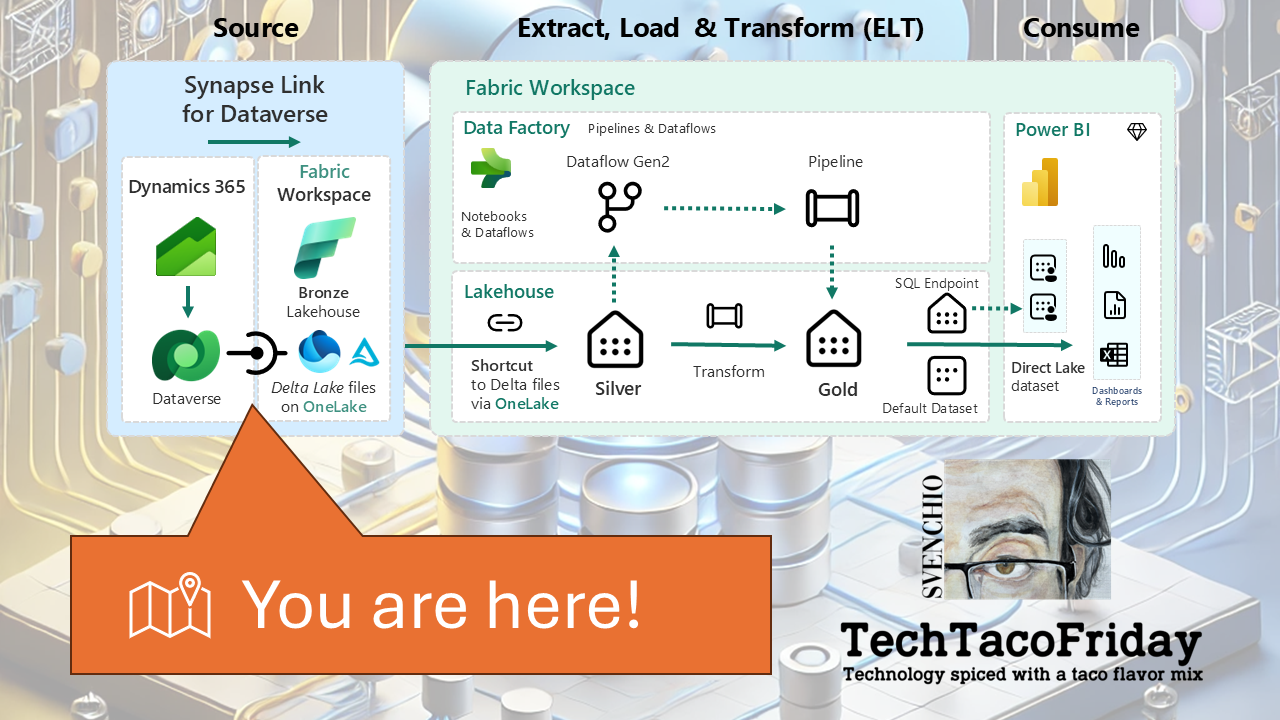

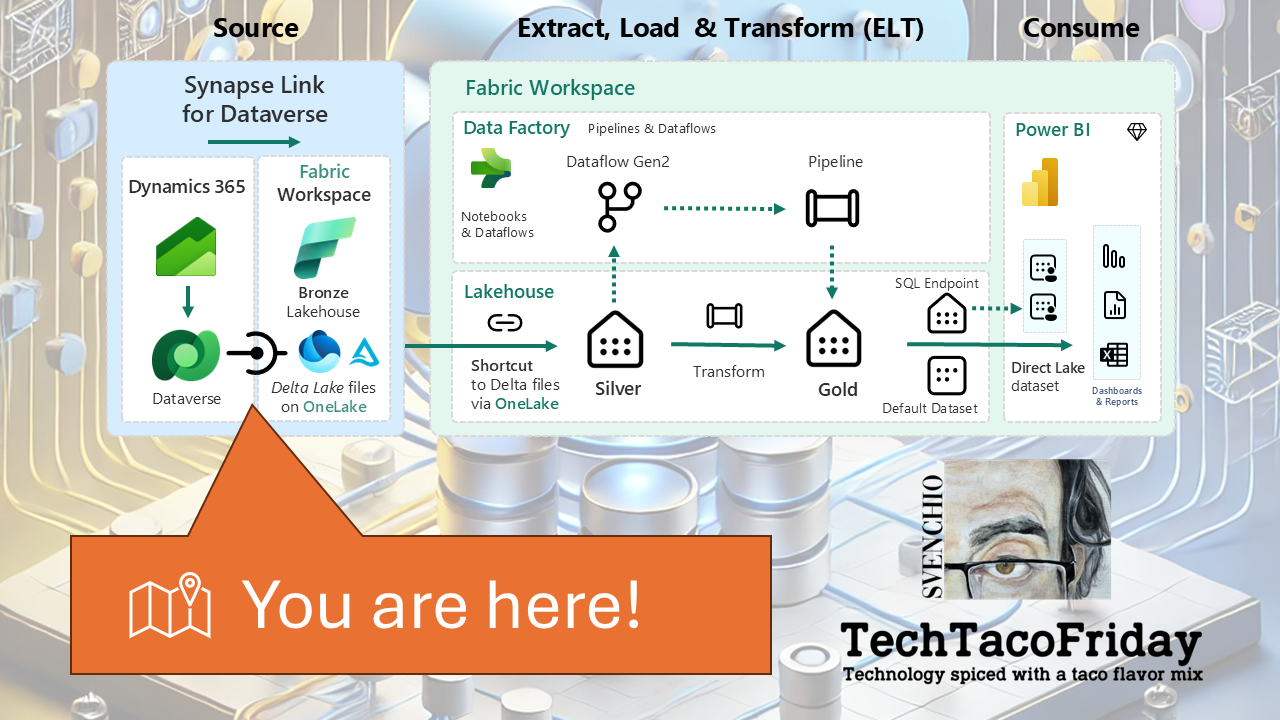

Dataverse Meets Fabric - Link via Azure Synapse Analytics

Learn how to integrate Dataverse with Microsoft Fabric using Azure Synapse Link. This step-by-step guide walks you through the setup using Bicep, YAML pipelines, and best practices for a scalable, Azure-native data pipeline.

Introduction

In the previous article, we compared the two official options for syncing Dataverse data into Microsoft Fabric. Now, it's time to roll up our sleeves and implement the "Link via Azure Synapse Analytics" approach.

I'll walk you through the entire process of integrating Dataverse with Microsoft Fabric the right way: with Infrastructure as Code. I’ve prepared everything you need using Bicep templates and a YAML pipeline, covering:

- Resource provisioning (Synapse, storage, permissions)

- Configuration of the Synapse Link from Power Apps

- Steps to link the data into Microsoft Fabric for analytics

This article is Part 3 of the Dataverse Meets Fabric series. If you landed here first, you might want to check out the full series to get the broader context.

Assumptions & Scope

This article assumes that you already have access to a Dataverse environment, and either have the necessary knowledge or support to navigate basic configurations within Power Platform. While I’ll reference Dataverse—for example, when verifying the environment’s region—this article does not cover Dataverse fundamentals.

Likewise, the infrastructure deployment for this integration is fully automated using Bicep templates and orchestrated through a YAML-based Azure DevOps pipeline. While I will share both the code and the pipeline used, I won’t be explaining either in depth here. If you need more context on these foundational elements, feel free to check out my earlier articles on these topics.

Pre-requisites

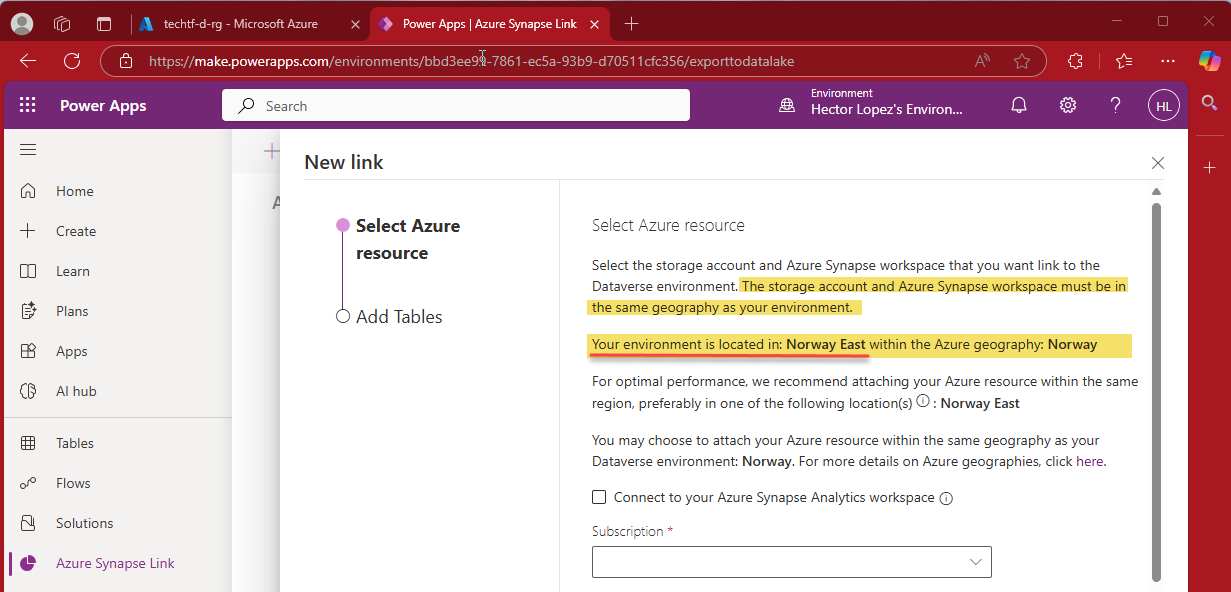

When integrating Dataverse with Microsoft Fabric using Azure Synapse Link, it's crucial to ensure that all components involved are located within the same Azure region.
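If you like to double-check this kind of thing before deploying, here is a minimal Python sketch that compares the regions you noted for each component. The component names and region values are hypothetical placeholders, and the normalization simply assumes that the portals may show the same region as either "West Europe" or "westeurope".

```python
def normalize_region(name: str) -> str:
    """Reduce 'West Europe', 'westeurope' and 'West-Europe' to one comparable form."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def find_region_mismatches(regions: dict[str, str]) -> dict[str, str]:
    """Return the components whose region differs from the first entry's region."""
    if not regions:
        return {}
    expected = normalize_region(next(iter(regions.values())))
    return {
        component: region
        for component, region in regions.items()
        if normalize_region(region) != expected
    }


# Hypothetical values, as noted from each portal during the pre-requisites.
noted = {
    "Fabric capacity": "West Europe",
    "Dataverse environment": "westeurope",
    "Azure resources (Bicep location)": "westeurope",
}
mismatches = find_region_mismatches(noted)
print("All regions match" if not mismatches else f"Mismatched: {mismatches}")
```

The first entry is treated as the reference region, so list the Fabric capacity first if that is the one you cannot move.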

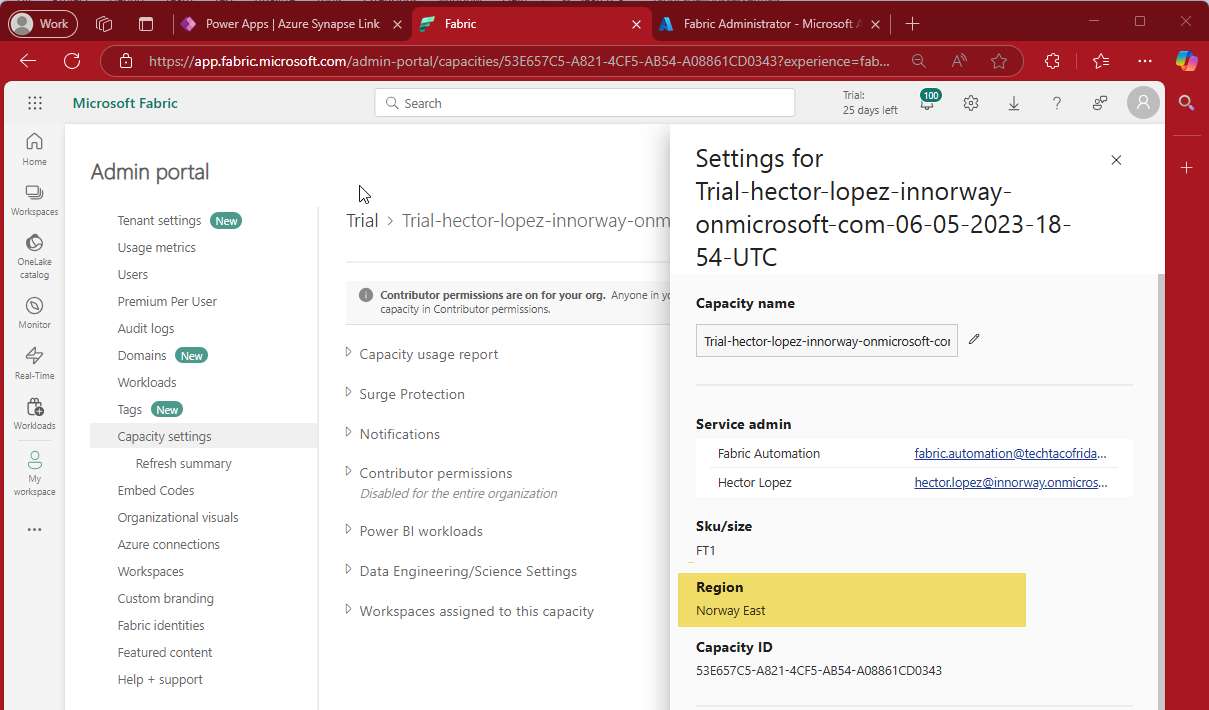

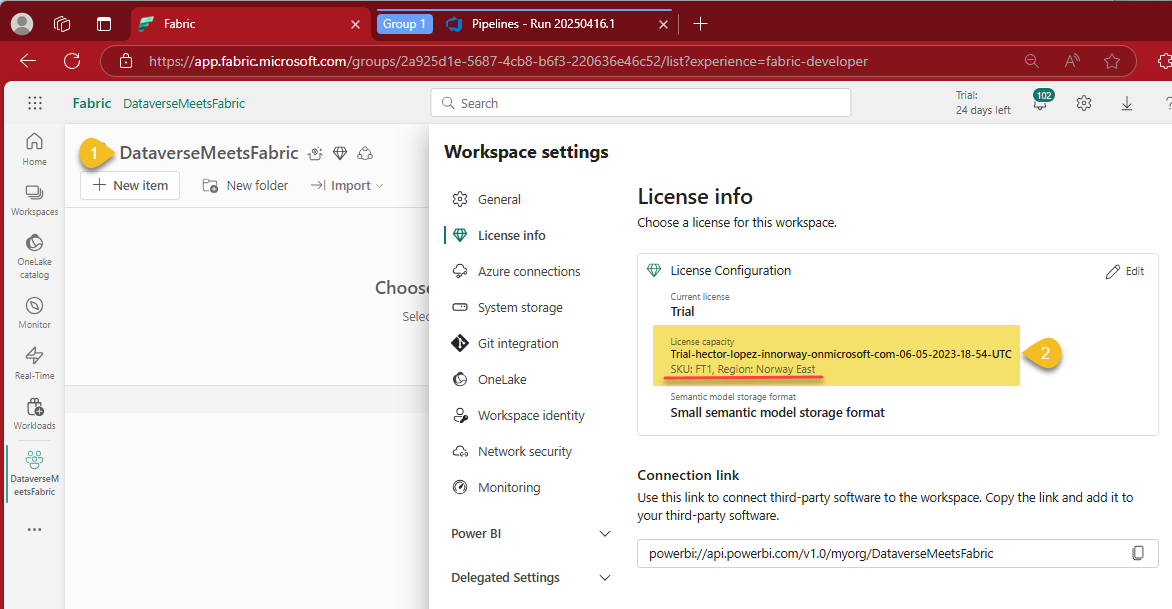

1 - Take note of your Fabric capacity region

From the Fabric Admin Portal, navigate to Capacity Settings and locate the capacity you plan to use. Click on it to open its settings, and take note of the region, as shown in the screenshot below.

2 - Take note of your Dataverse environment region

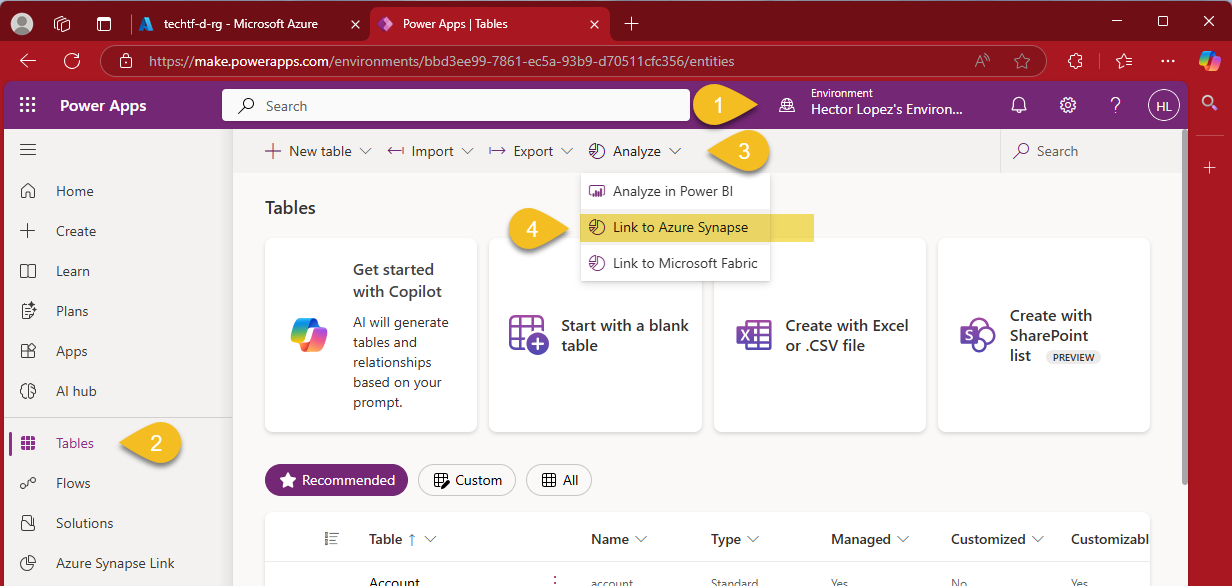

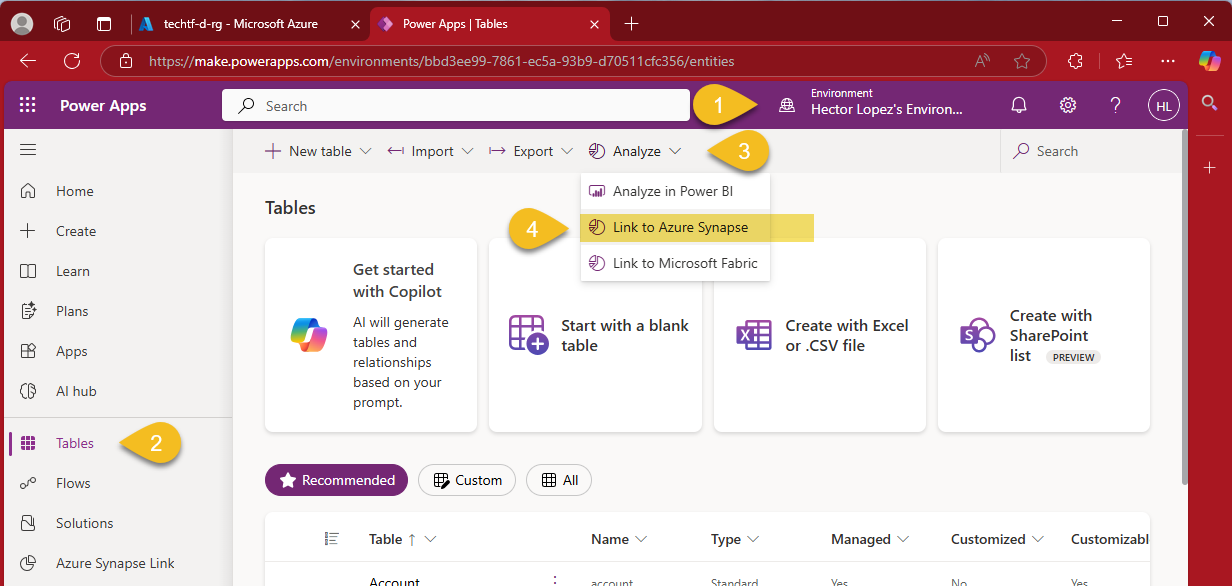

From your Power Apps maker portal, select the Environment (1), then click on Tables (2), Analyze (3) and Link to Azure Synapse (4), as shown below.

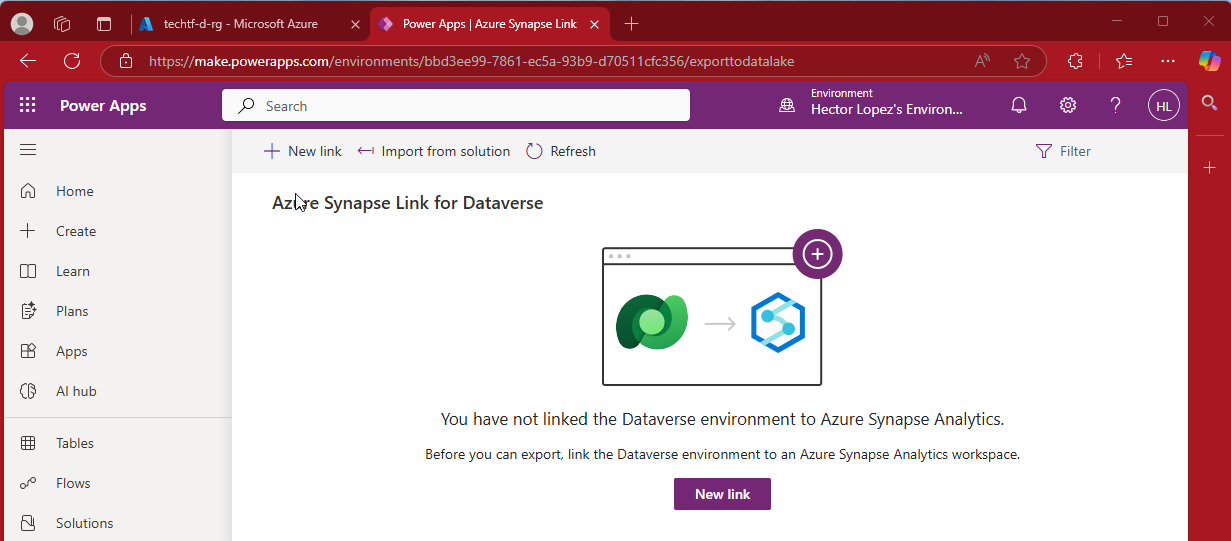

Click the New link button.

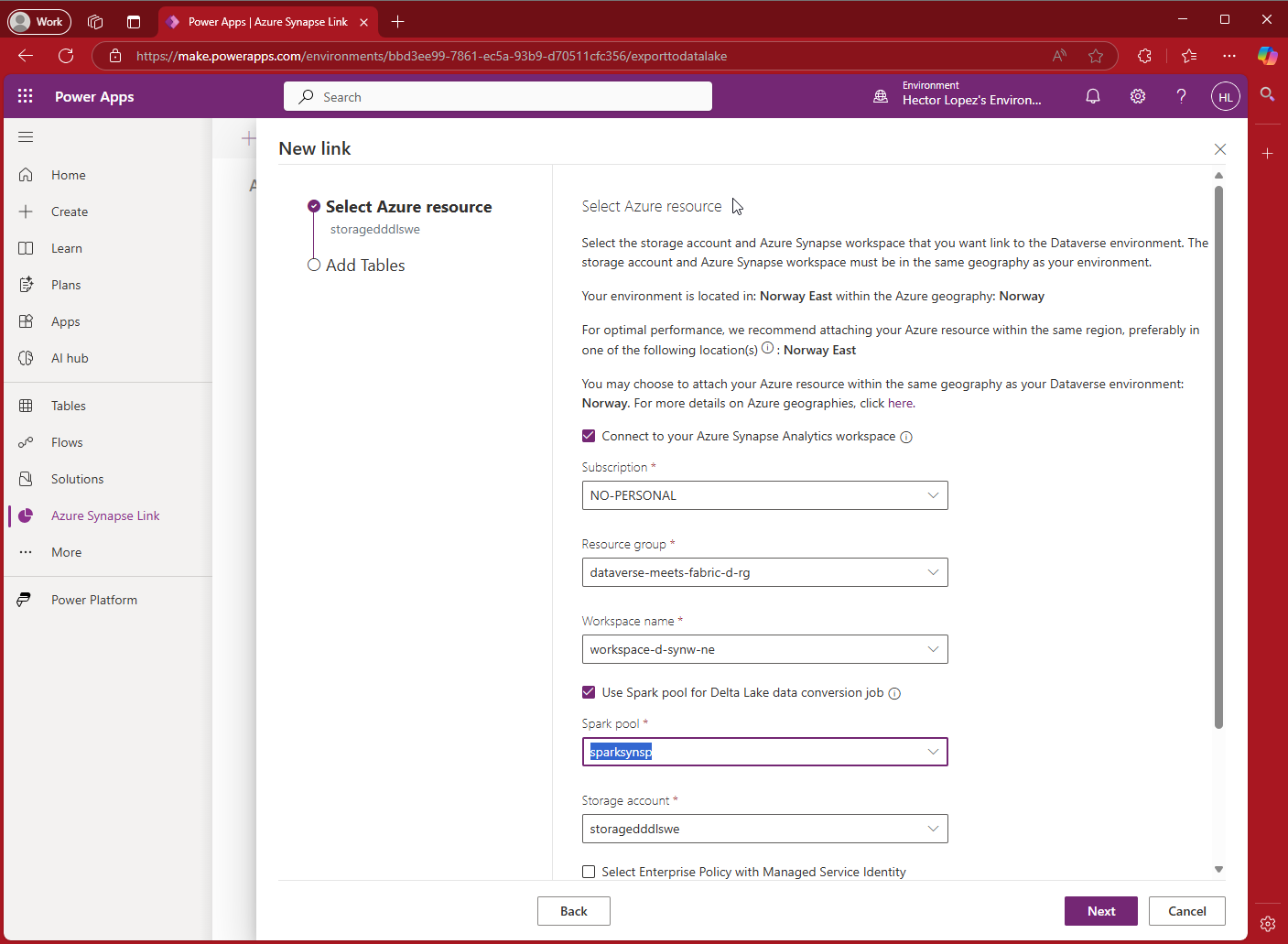

Take note of your Dataverse environment's location as shown in the image below, then click Back or Cancel. For the time being we just need to verify the region, but we will return to this screen later 😉

3 - Create Fabric Workspace

Assuming you have both a Fabric capacity and a Dataverse environment in the same region, let's create a new Fabric Workspace (1) and assign it to the capacity (2).

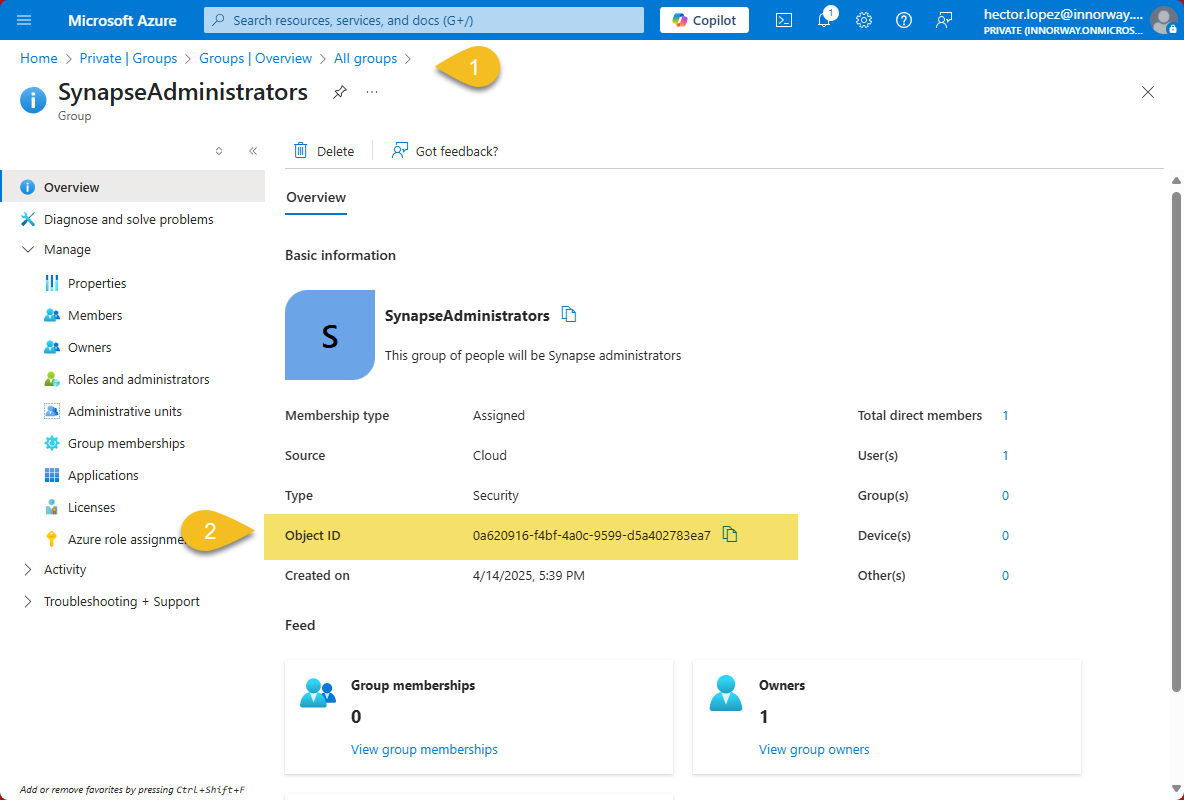

4 - Get designated Synapse Administrator's object ID

As shown in the example below, locate the user or group in Microsoft Entra ID (1) that you want to assign as the Synapse Administrator, and take note of their Object ID (2). We’ll use it shortly 😉

Procedure

If you've reached this point, you have everything you need to proceed 😁🤞

1 - Provisioning infrastructure

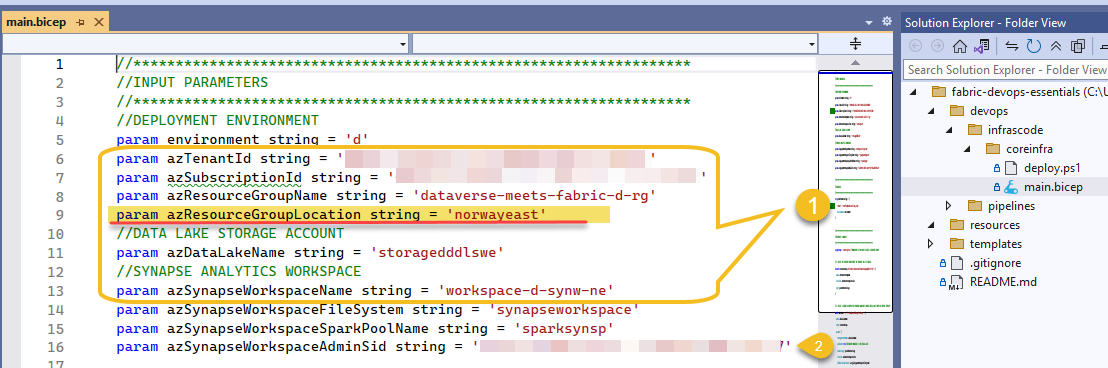

1.1 - Get the code. This repo/branch contains both the Bicep templates and a YAML-based Azure DevOps pipeline.

1.2 - Configure your variables. Set the values that correspond to your Azure environment (e.g. Tenant & Subscription) and adjust the names and the location of your resources (1). The variable azSynapseWorkspaceAdminSid (2) must hold the designated Synapse Administrator's object ID obtained in the pre-requisites.
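A typo in these variables usually only surfaces once the deployment fails, so a quick sanity check can save a pipeline run. The sketch below is not part of the repo: azSynapseWorkspaceAdminSid is the variable named above, while azStorageAccountName is a hypothetical name I use here for illustration. It checks that the object ID is GUID-formatted and that the storage account name follows Azure's rule of 3 to 24 lowercase letters and digits.

```python
import re

# An Entra object ID is a GUID such as 00000000-0000-0000-0000-000000000000.
GUID_PATTERN = re.compile(r"[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")
# Azure storage account names: 3-24 characters, lowercase letters and digits only.
STORAGE_NAME_PATTERN = re.compile(r"[a-z0-9]{3,24}")


def check_variables(variables: dict[str, str]) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []
    admin_sid = variables.get("azSynapseWorkspaceAdminSid", "")
    if not GUID_PATTERN.fullmatch(admin_sid):
        problems.append("azSynapseWorkspaceAdminSid is not a GUID-formatted object ID")
    # 'azStorageAccountName' is a hypothetical variable name, not taken from the repo.
    storage_name = variables.get("azStorageAccountName", "")
    if not STORAGE_NAME_PATTERN.fullmatch(storage_name):
        problems.append("azStorageAccountName must be 3-24 lowercase letters or digits")
    return problems


# Placeholder values for illustration only.
example = {
    "azSynapseWorkspaceAdminSid": "00000000-0000-0000-0000-000000000000",
    "azStorageAccountName": "stdataversefabric01",
}
print(check_variables(example) or "Variables look fine")
```

This only validates the format; it cannot tell whether the object ID actually belongs to the user or group you intended.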

1.3 - Create Synapse Infrastructure. Create and run the pipeline. If you need step-by-step instructions, refer to my previous article, Resilient Azure DevOps YAML Pipeline.

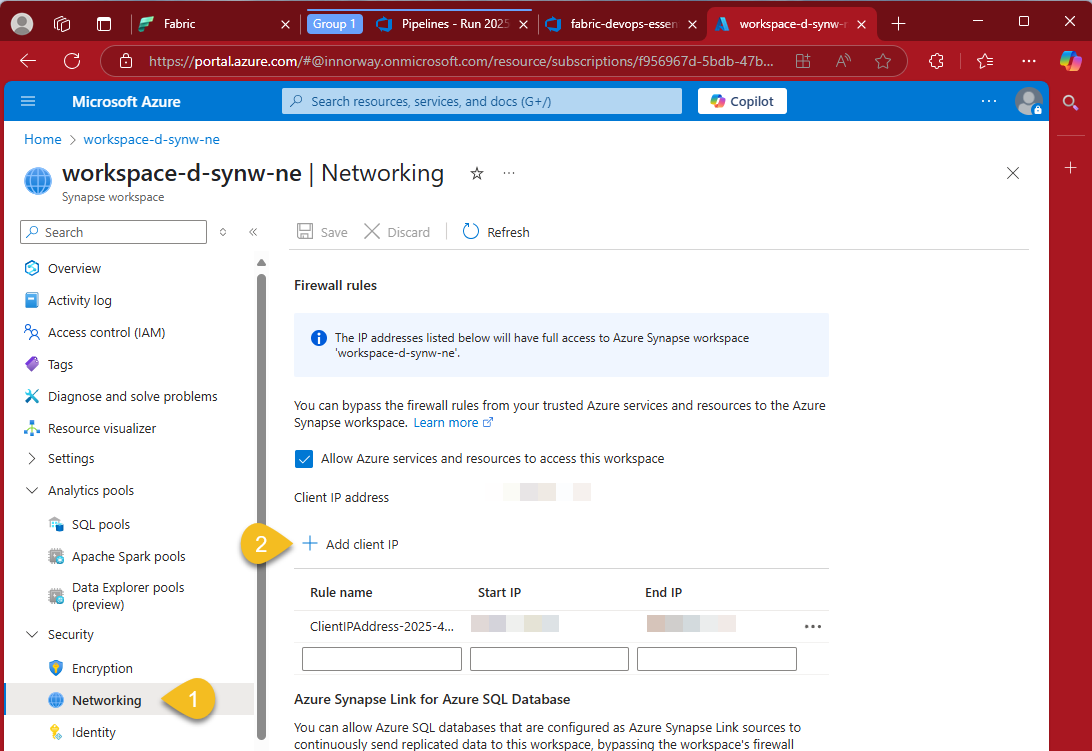

1.4 - Add your Client/Local IP address to the firewall. Go to your newly created Synapse Analytics workspace and, under Security > Networking (1), add your Client IP address (2) and click Save.
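If you would rather script this step than click through the portal, the same rule can be created with the Azure CLI command `az synapse workspace firewall-rule create`. The sketch below only builds the argument list and validates the IP address first. The resource group, workspace, and rule names are hypothetical placeholders, and it assumes the Azure CLI is installed and you are already signed in.

```python
import ipaddress


def firewall_rule_command(
    resource_group: str, workspace: str, rule_name: str, client_ip: str
) -> list[str]:
    """Build the Azure CLI call that allows a single IPv4 address through the firewall."""
    # Raises ValueError for malformed input, before anything is sent to Azure.
    ip = str(ipaddress.IPv4Address(client_ip))
    return [
        "az", "synapse", "workspace", "firewall-rule", "create",
        "--resource-group", resource_group,
        "--workspace-name", workspace,
        "--name", rule_name,
        # A single address is expressed as a range with identical start and end.
        "--start-ip-address", ip,
        "--end-ip-address", ip,
    ]


# Hypothetical names; 203.0.113.10 is a documentation-only address.
command = firewall_rule_command(
    "rg-dataverse-fabric", "syn-dataverse-fabric", "AllowMyClientIp", "203.0.113.10"
)
print(" ".join(command))
```

To actually apply the rule, pass the list to `subprocess.run(command, check=True)`. Keeping the command as a list rather than a single string avoids shell-quoting surprises.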

2 - Create Azure Synapse Link

Let's move away from Azure and into our Power Apps maker portal. Select the Environment (1), then click on Tables (2), Analyze (3) and Link to Azure Synapse (4), as shown below.

Configure the link using the Azure resources previously created by the IaC pipeline.

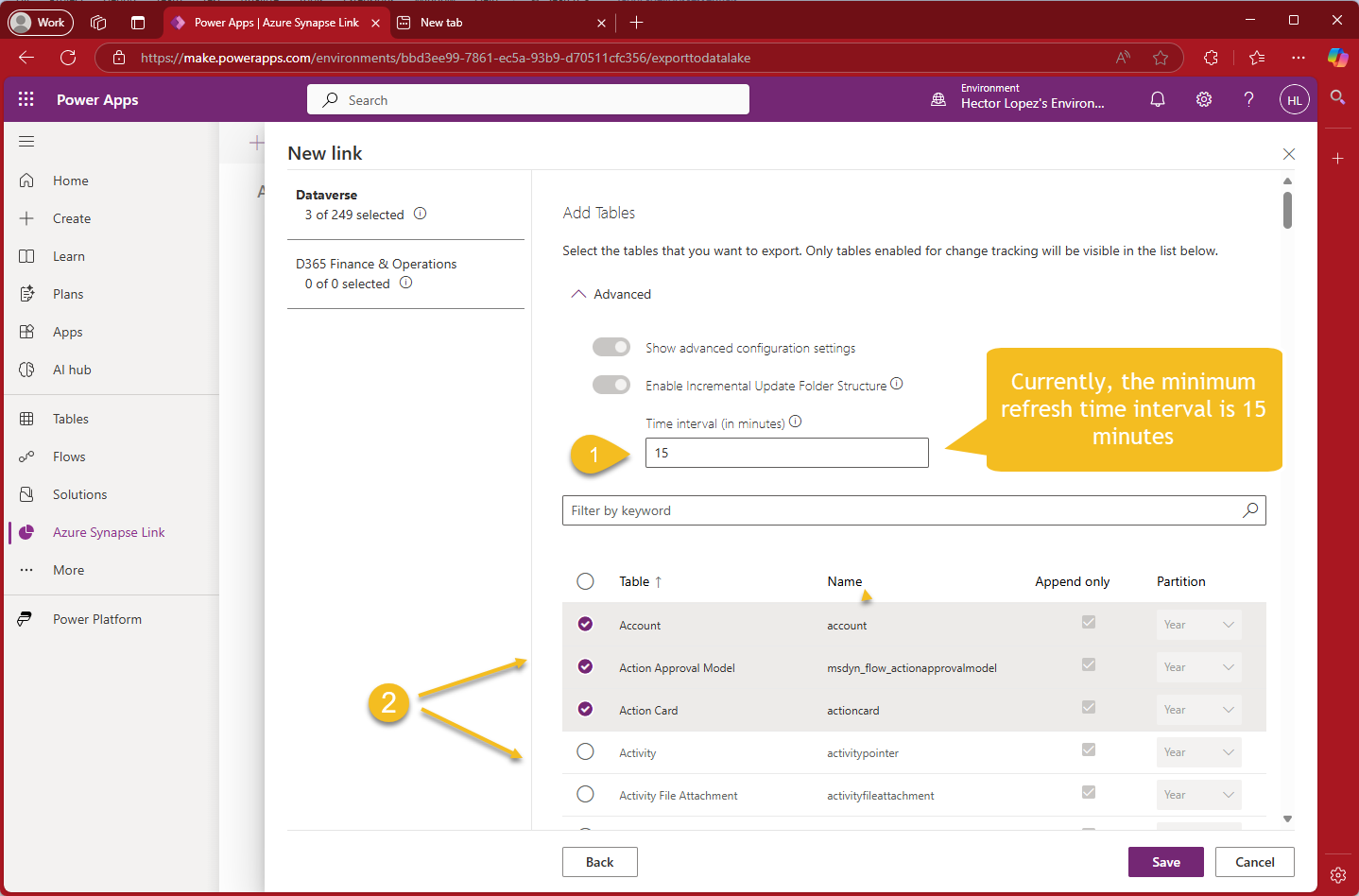

Configure the Refresh time interval (1) and the tables you would like to link (2), then click Save.

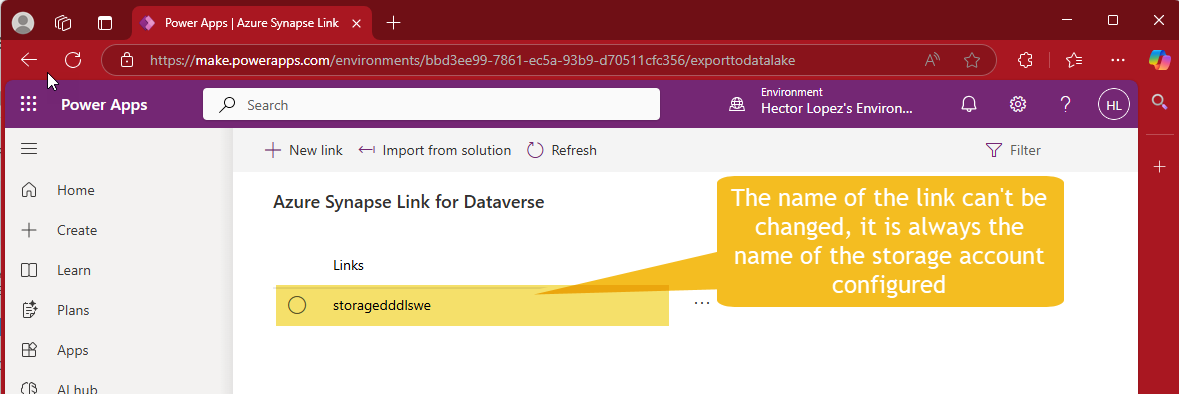

If everything works well, you should be able to see the Synapse link created 😎🍻

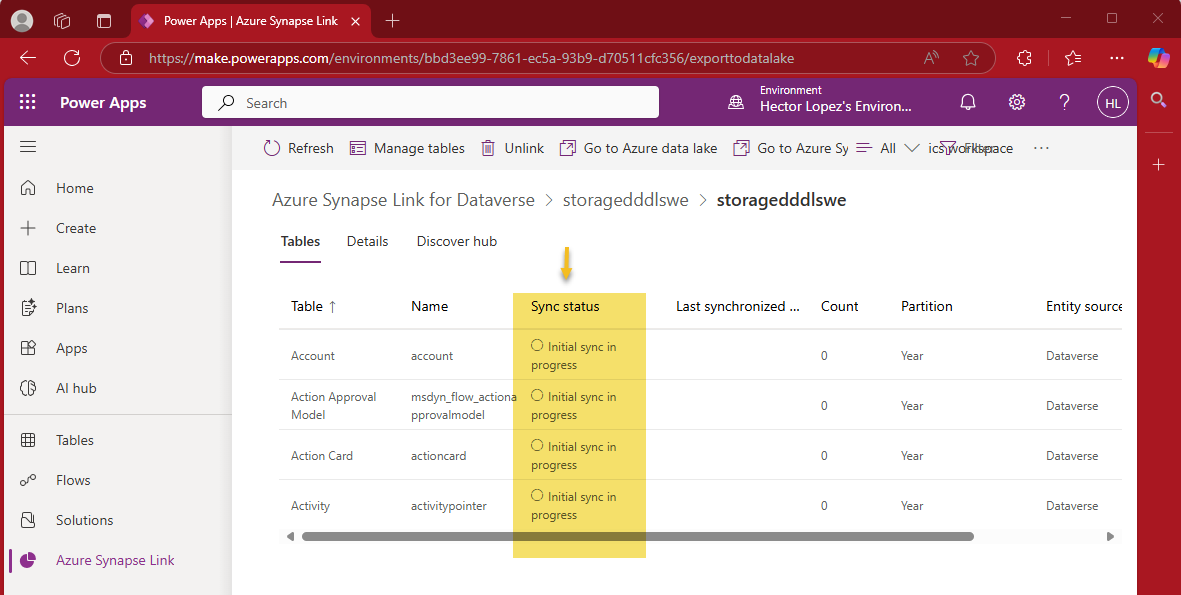

Click on "More commands" (the dots next to the link's name) and select Tables to monitor the sync status. Wait until the sync status of every table is Active 😅 before proceeding to the final step.
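The "wait until everything is Active" logic can be sketched as a small polling loop. To be clear, this article only checks the status in the portal: `fetch_statuses` below is a hypothetical callable standing in for however you obtain the per-table status, and any status text other than "Active" is made up for the demo.

```python
import time
from typing import Callable, Mapping


def wait_until_all_active(
    fetch_statuses: Callable[[], Mapping[str, str]],
    poll_seconds: float = 60.0,
    max_polls: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until every table reports 'Active'; return False if max_polls is exhausted."""
    for attempt in range(max_polls):
        statuses = fetch_statuses()
        pending = {table: s for table, s in statuses.items() if s != "Active"}
        # An empty result means nothing is linked yet, so keep waiting.
        if statuses and not pending:
            return True
        if attempt < max_polls - 1:
            sleep(poll_seconds)
    return False


# Demo with canned snapshots instead of a real status source.
snapshots = iter([
    {"account": "Initial sync in progress", "contact": "Active"},
    {"account": "Active", "contact": "Active"},
])
ready = wait_until_all_active(lambda: next(snapshots), poll_seconds=0, sleep=lambda _: None)
print("All tables active" if ready else "Timed out waiting for sync")
```

The cap on polls matters: an initial sync of large tables can take a while, and a loop without a limit would hide a table that is stuck in an error state.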

3 - Link to Microsoft Fabric Workspace

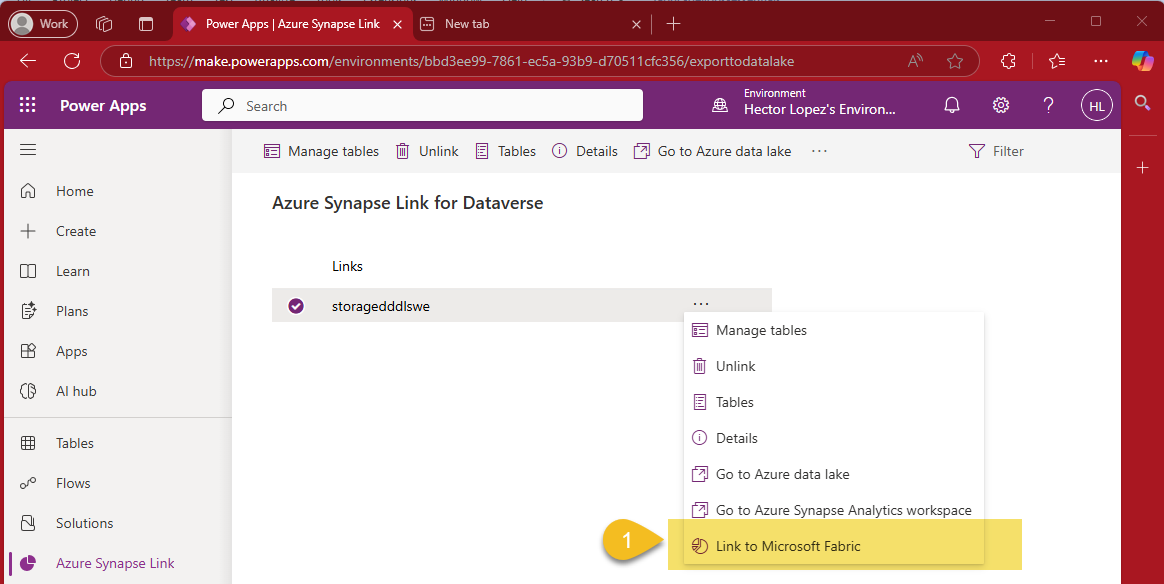

Final step: click on "More commands" again and select Link to Microsoft Fabric (1).

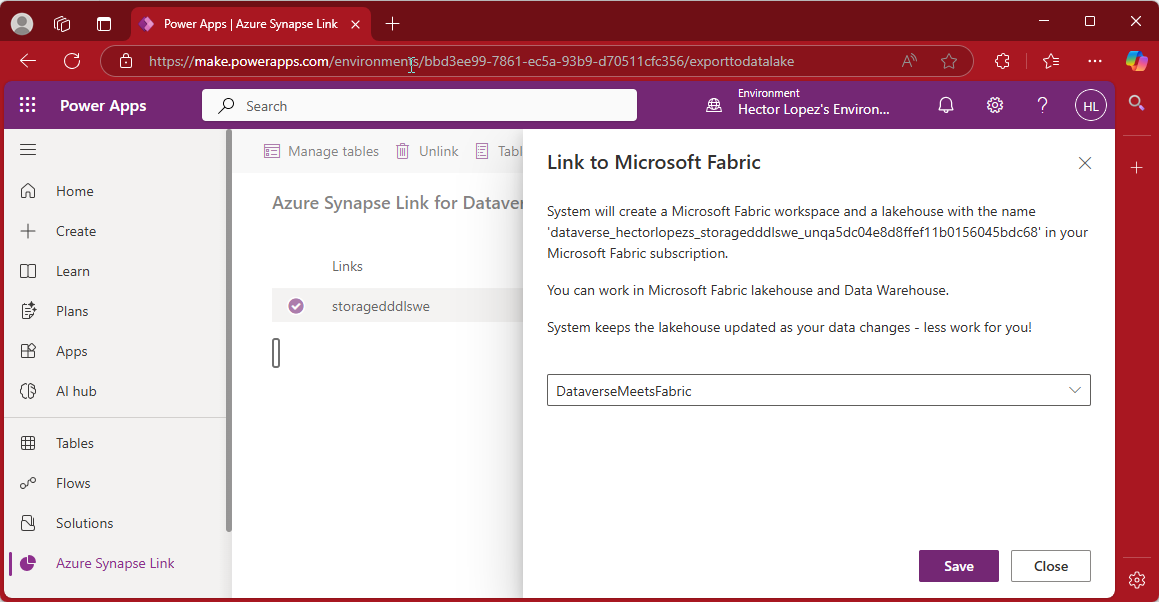

Nothing special about this next step: just pick the Fabric workspace created in the pre-requisites section and click Save. The interesting part starts after this 😉

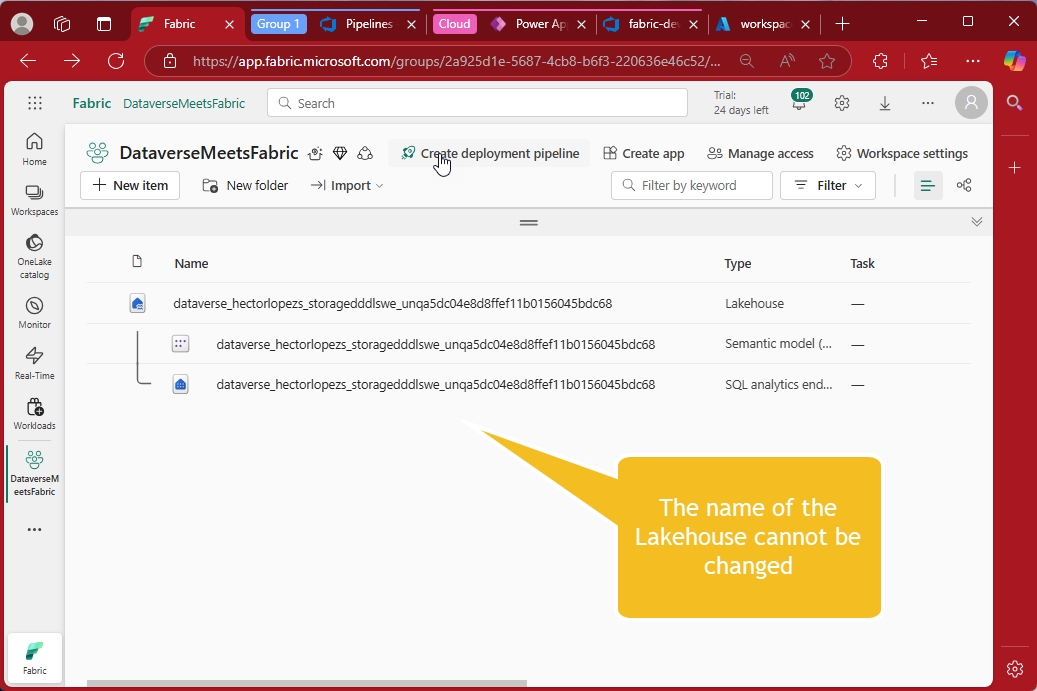

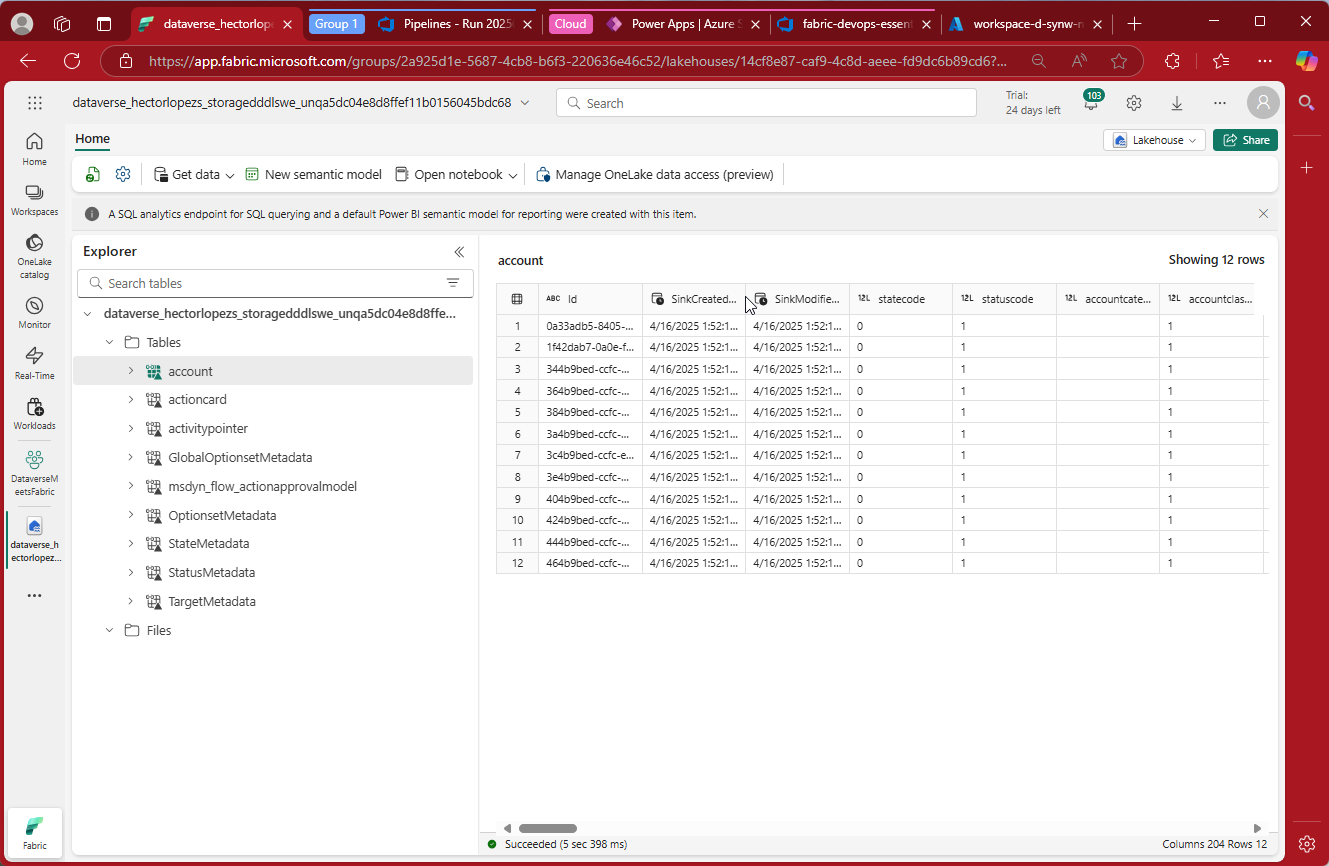

Go back to your Fabric workspace and check it out: a brand new Lakehouse receiving the data from your Dataverse at the refresh interval configured during the creation of the Synapse link 🙌

All that is left is to wait a bit for the data sync to complete, and we are ready to consume the data from the Lakehouse 🥳

Wrapping up!

We’ve now successfully completed the setup of a Dataverse-to-Fabric data pipeline via Azure Synapse Link—automated with Infrastructure as Code, orchestrated through a YAML pipeline, and wired up to Microsoft Fabric with minimal manual effort.

This approach offers granular control, table-level selection, and integration flexibility, making it ideal for organizations already invested in Azure services or with more advanced data engineering needs.

But it’s not the only option.

In the next article, we’ll explore the Link to Fabric (Direct) method—an even more seamless, Fabric-native integration that auto-syncs all Dataverse tables in Delta Lake format. It may not offer the same level of control, but it makes up for it with speed and simplicity.

So whether you need full control or fast results—Fabric has you covered.

Stay tuned for Part 4 of the Dataverse Meets Fabric series!