Version-control & CI/CD your Power BI reports: Using DevOps Git Repos and Pipelines

I'll show you how to orchestrated thru Azure DevOps pipelines a fully functional CI/CD cycle for your Power BI reports.

Setting the stage

In today's data-driven world, the importance of version control and seamless deployment processes cannot be overstated, especially for Power BI users. However, not everyone has access to Premium capacity-enabled workspaces, which can limit the options for version control and deployment. Fear not, for there's a solution at hand! Even without Premium capacity-enabled workspaces, you can achieve robust version control and streamline your deployment process through Azure DevOps pipelines. Let's dive in and explore how you can orchestrate a fully functional CI/CD cycle for your Power BI reports.

Solution

On the next part of the article, I'll explain just the most relevant parts of the code

Setting report's backend DB and CI/CD Infrastructure

I'm creating report's back-end Azure SQL Server and Database using BICEP scripts

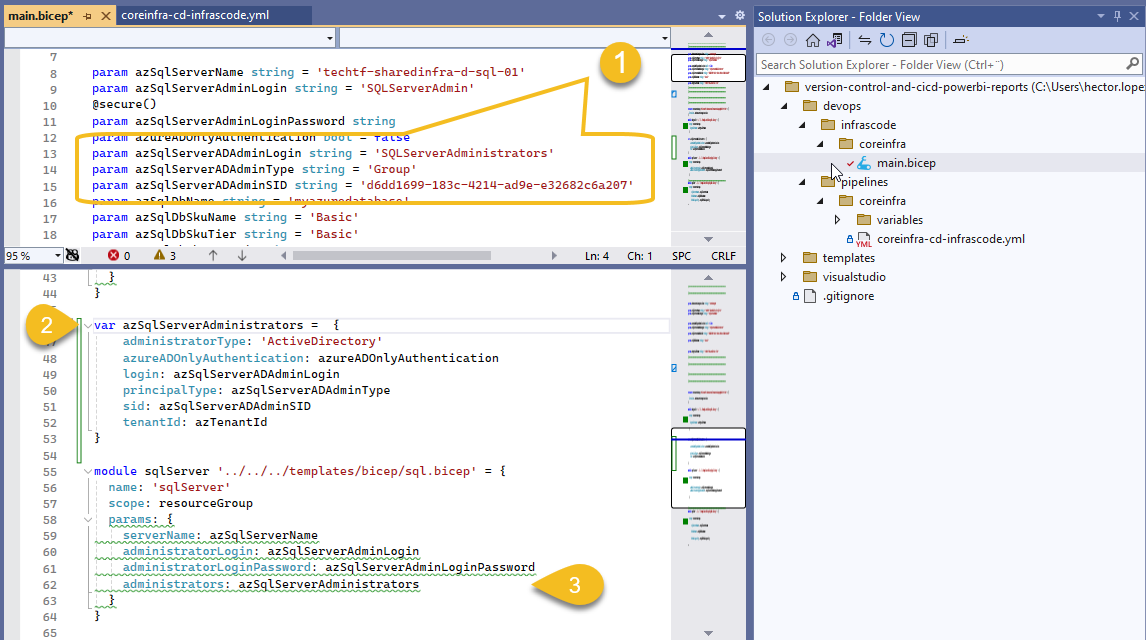

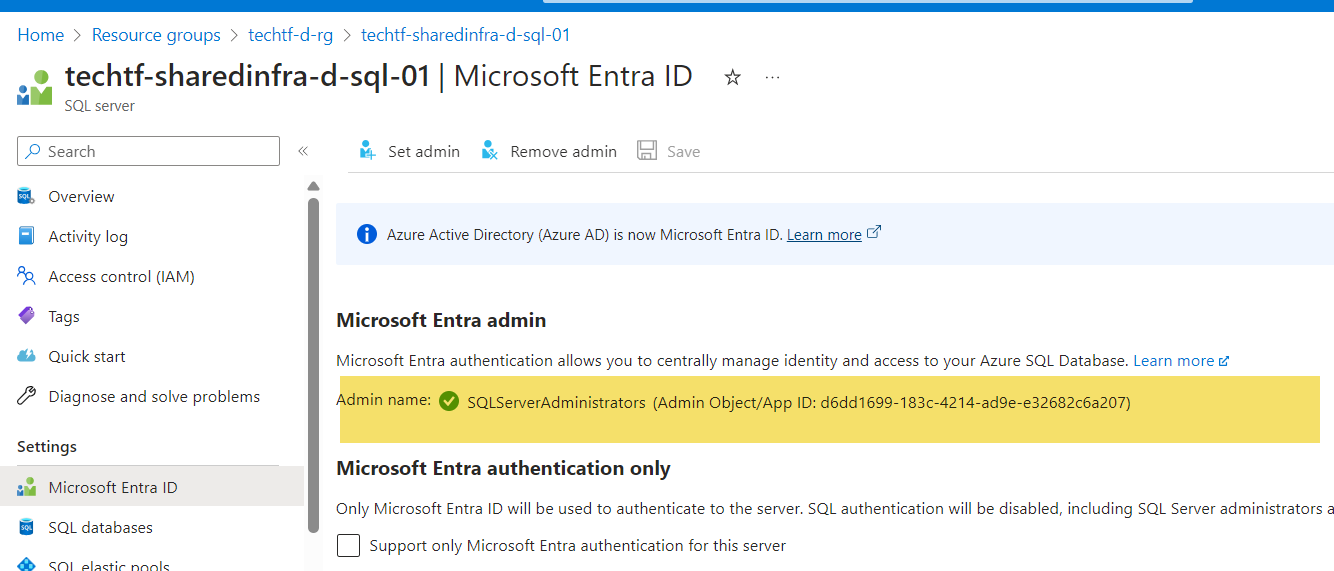

In the example shared in the code, I'm using IaC to create the report's back-end database along it's with the SQL Server Logic server, notice that I'm assigning an Entra's ID security group (1) to be the server's Administrator (2)(3).

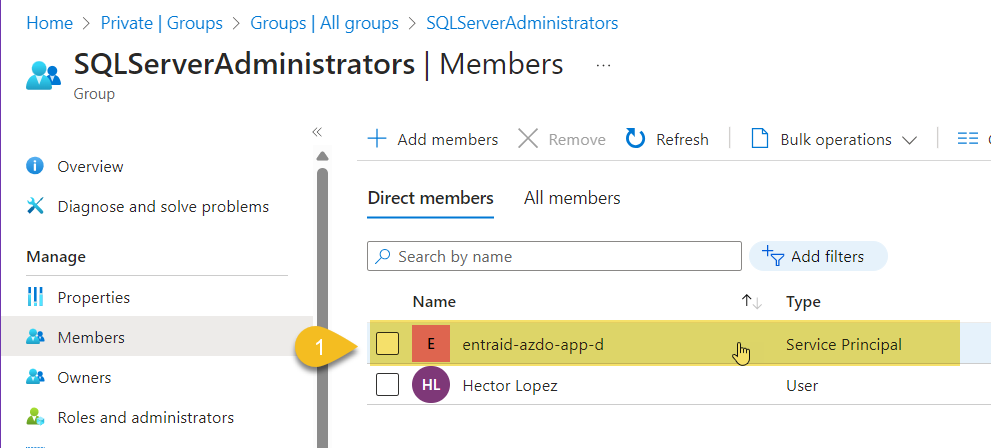

I created group SQLServerAdministrators manually and added as member the security principal representing my AzDO Service Connection (1)

The result is that SQL Logic server admin is the security group SQLServerAdministrators and by proxy, pipeline via its service connection has admin privileges to deploy the DACPAC

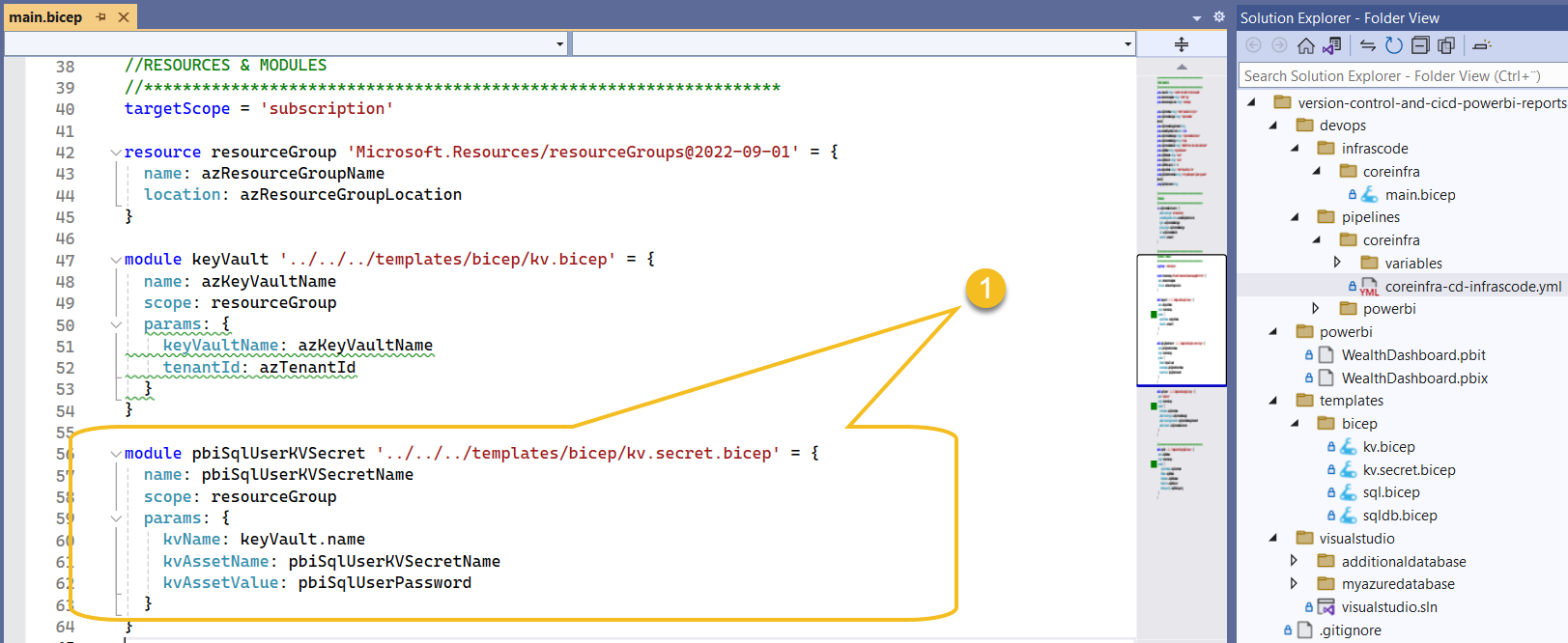

I'm also creating a Key Vault Secret (1) containing the password for a SQL User we will be using to authenticate the report towards the Database.

Check supporting article:

- Infrastructure-as-code made easy with Bicep language : "In this article, we will explore what Bicep is, how it works, and how it can help you simplify and automate your Azure deployments"

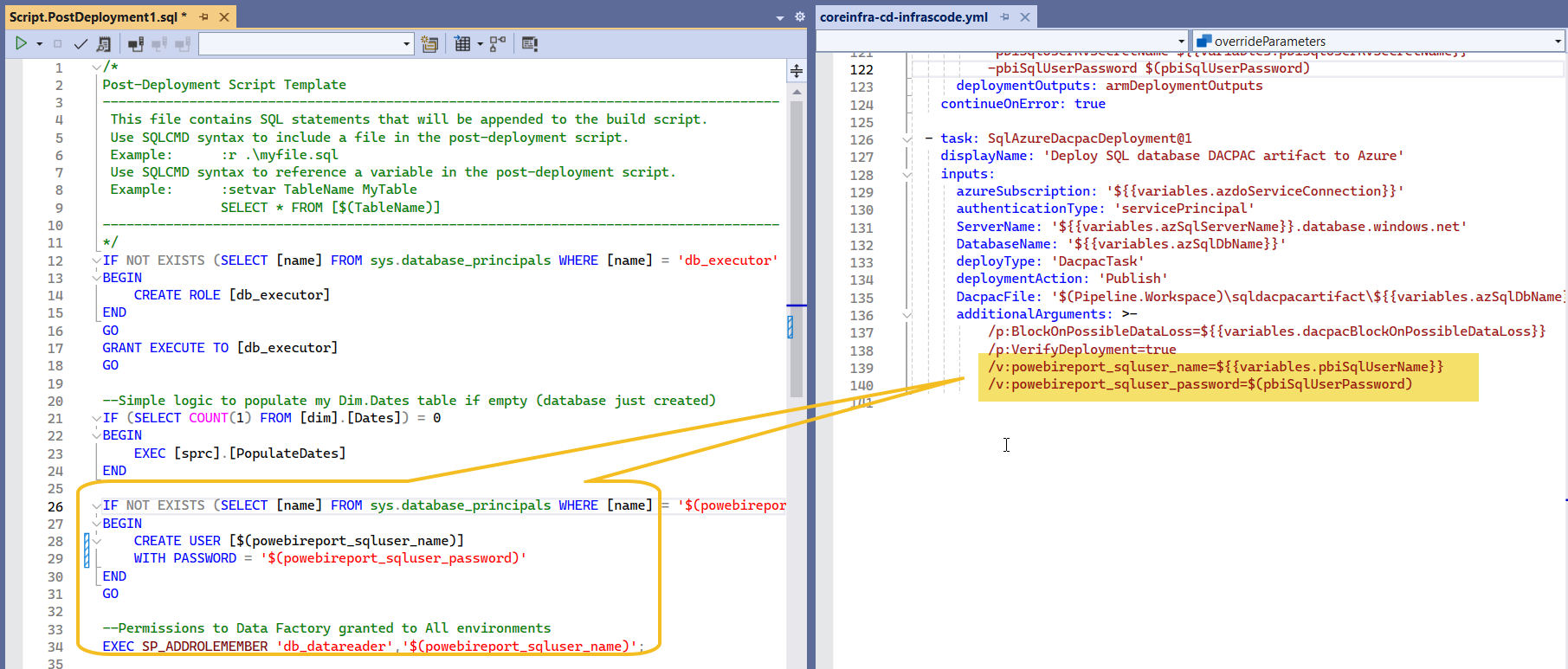

Once we created our Azure Infrastructure, we will be deploying the Database schema (DACPAC) generated from our Visual Studio Database Project and grant db_datareader role to the Power BI report SQL User.

Check supporting articles:

- Create a Visual Studio Database project, host in DevOps Git-repo and deploy with pipelines: "Learn to create a Visual Studio Database project, host it in a DevOps Git repo, and deploy it using Azure DevOps pipelines"

- Provisioning Database Security thru Visual Studio Database Projects and Deploying via DevOps YAML Pipelines: "The purpose of this article is to explain how access to an SQL Database can be configured from a Visual Studio (VS) Project in order to be deployed along with a DACPAC thru DevOps pipelines"

Configure Azure DevOps Organization

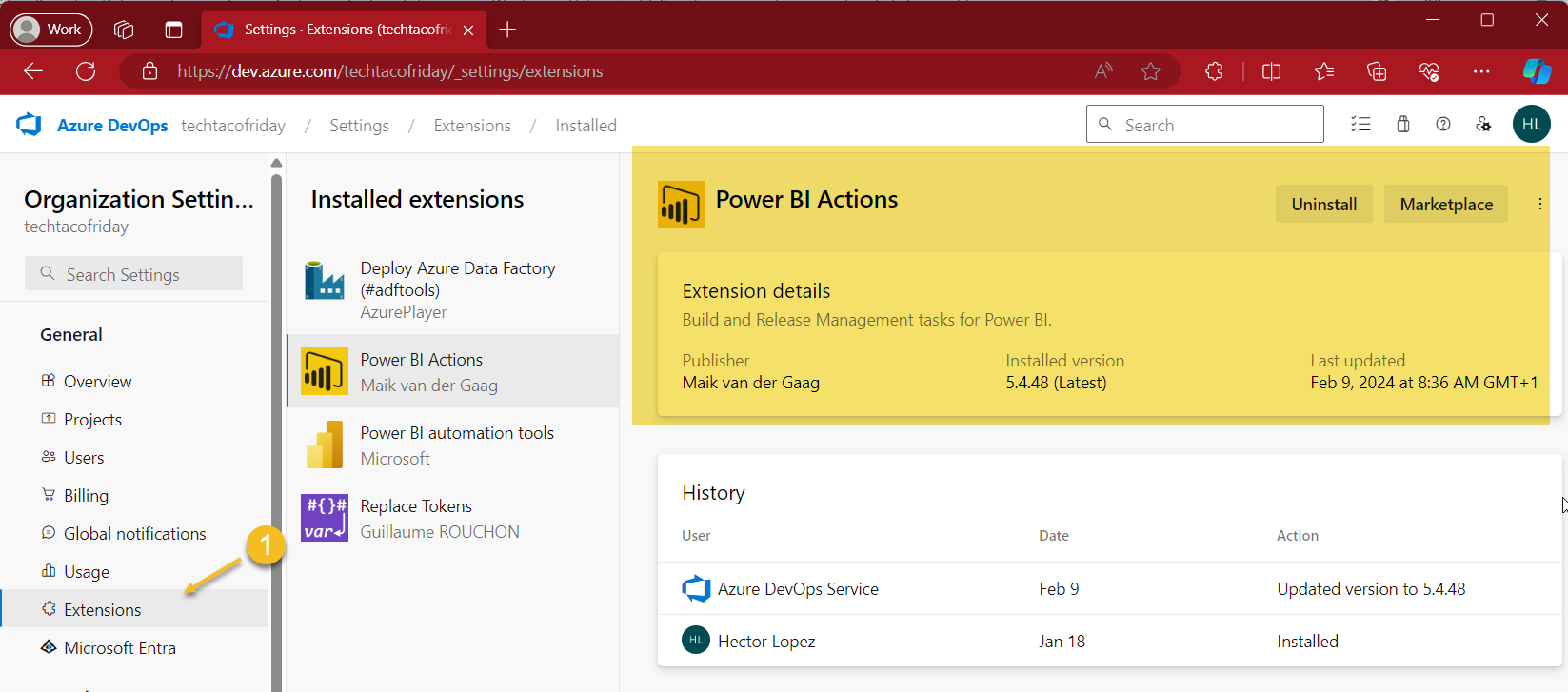

Go to your AzDO Organization Settings > Extensions and install the extension Power BI Actions by Maik van der Gaag, you can get for free!

Power BI Actions is a task for Azure Pipelines. With this tasks you can automate specific tasks for Power BI.

Configure Azure DevOps Organization/Project Service Connection

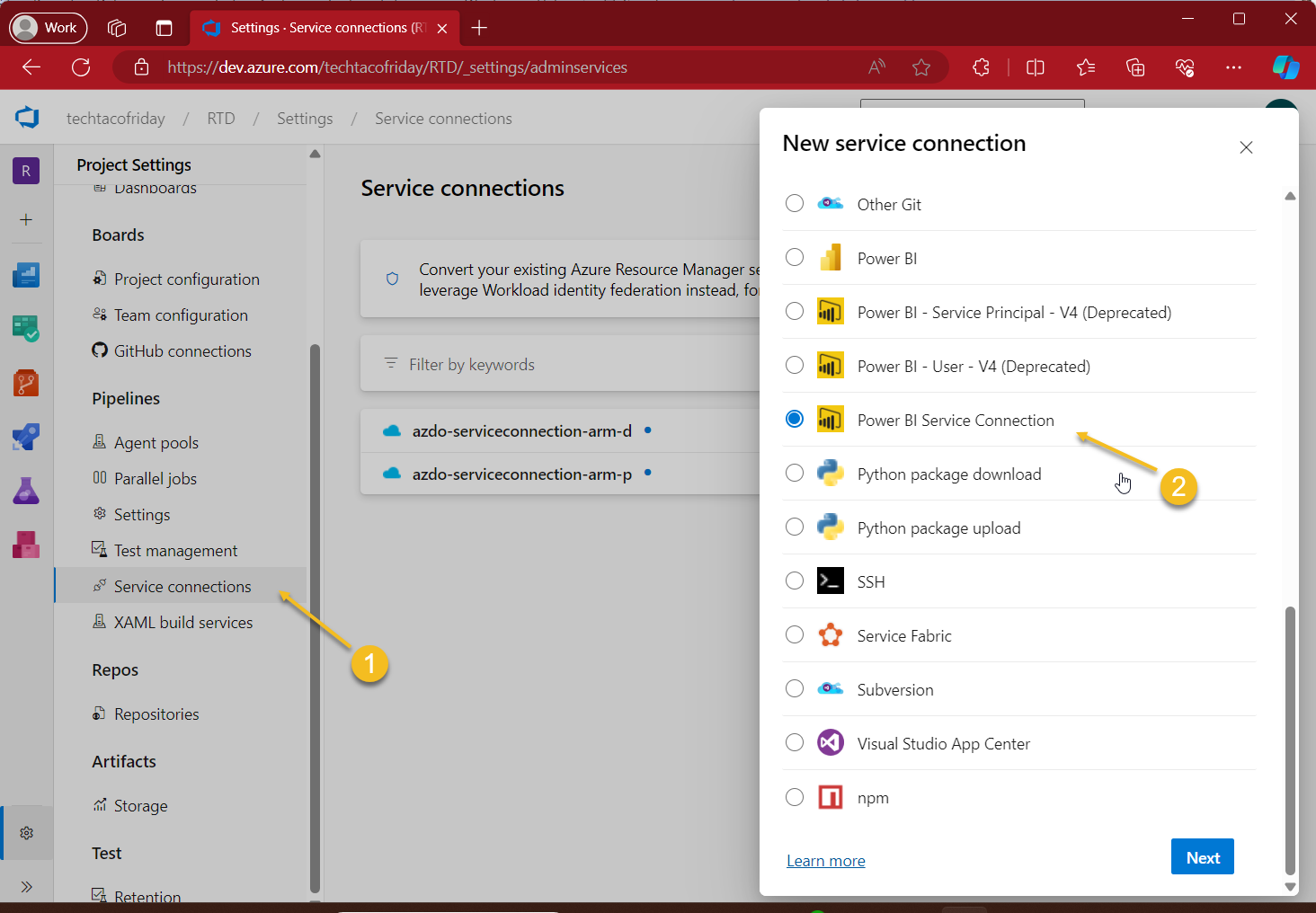

Next, we will need to add new Service Connections, from your AzDO Project Settings > Service Connections (1), add a new Power BI Service Connection (2)

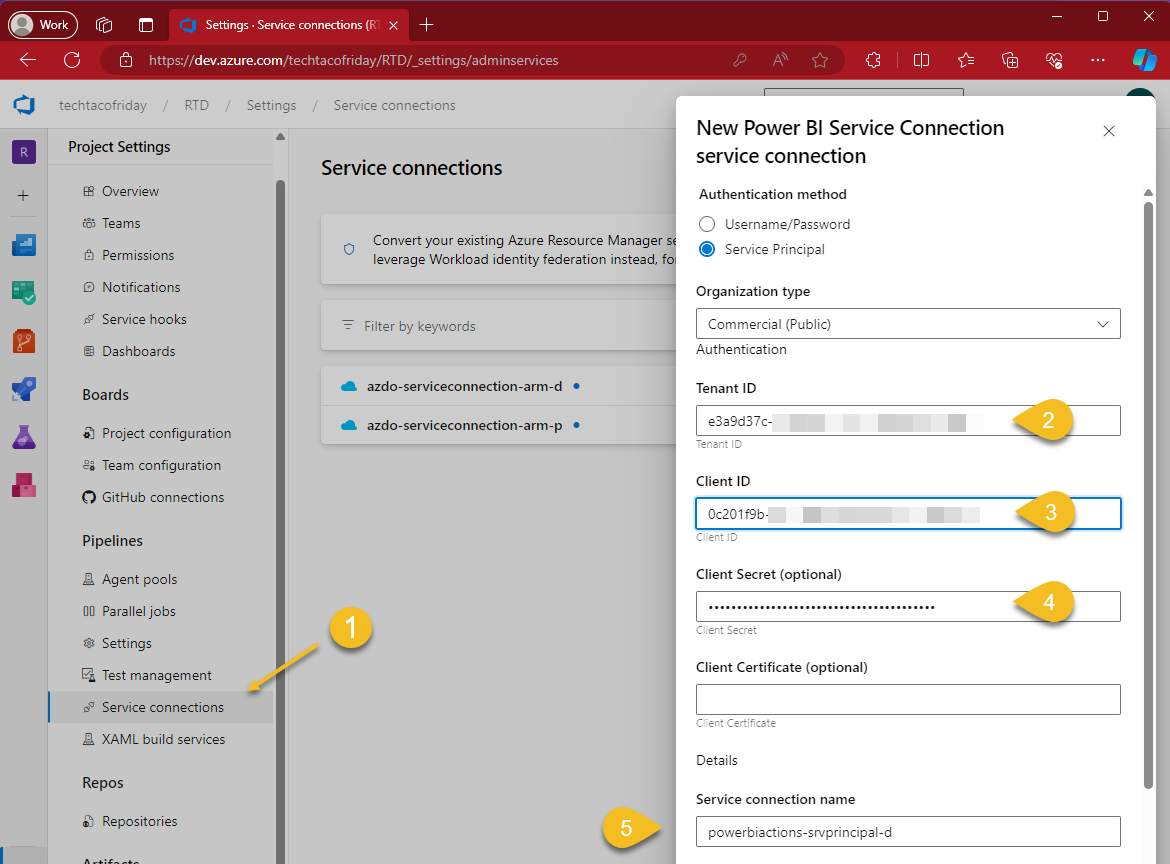

Configure your Service Connection with an Entra ID Application Tenant ID (2), Client ID (aka Application ID)(3) and app's secret created for this service connection (4); name the service connection (5) following your defined naming conventions and create.

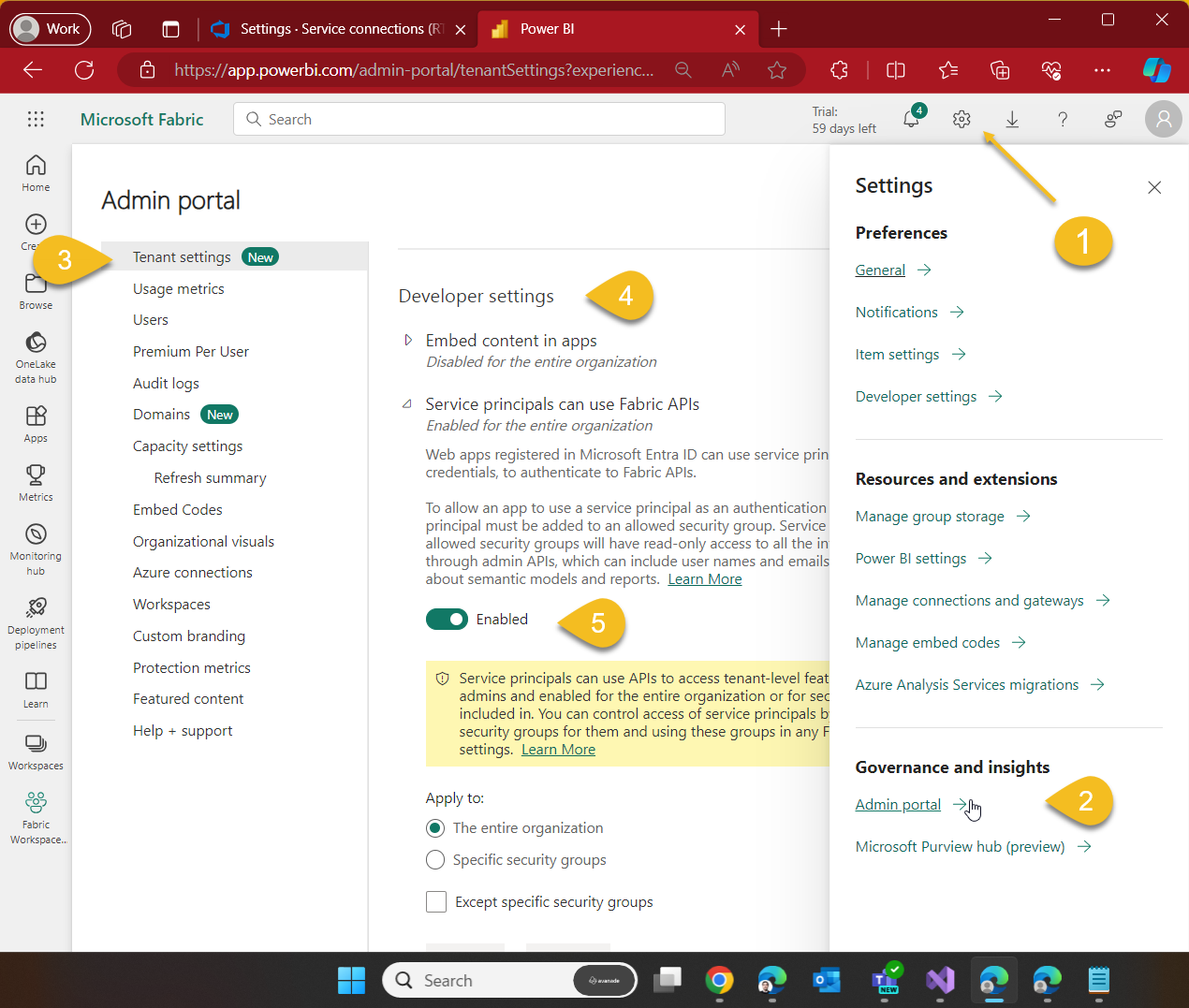

Allowing Service Principals to use Fabric API

Go to your Power BI Cloud service, click on Settings (1) > Admin Portal (2) and from Tenant settings (3) scroll down until you locate Developer settings section (4), enable the toggle switch (5) for Service principals can use Fabric API's

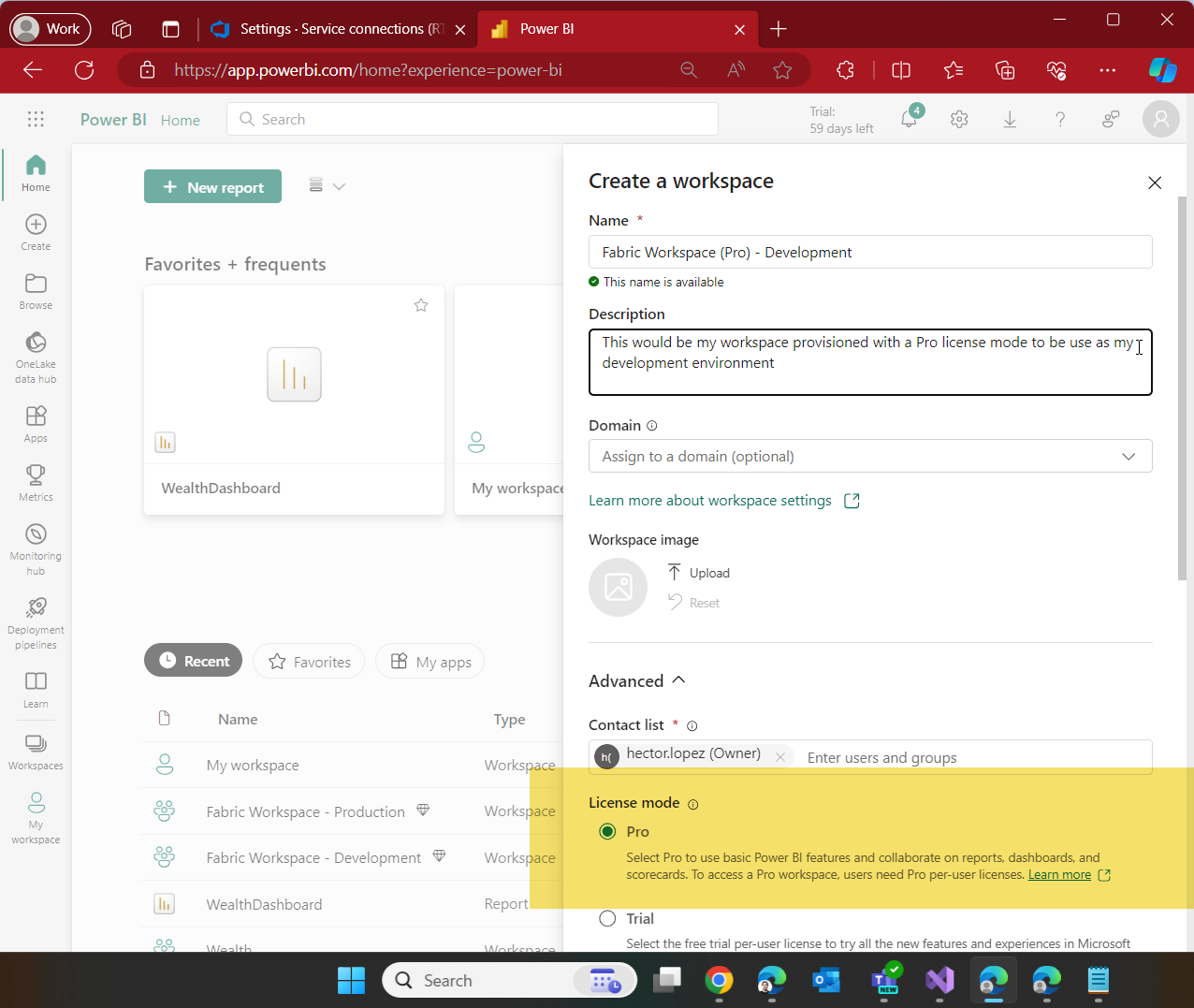

Create Fabric workspaces for Dev and Production

Create workspaces, one for your Development environment as shown below and as many others depending on your production release requirements, perhaps you need two more, one UAT that needs to be signed-off before going to Production? Up to you ... in the example I'm sharing with you, I will just add one more, Production.

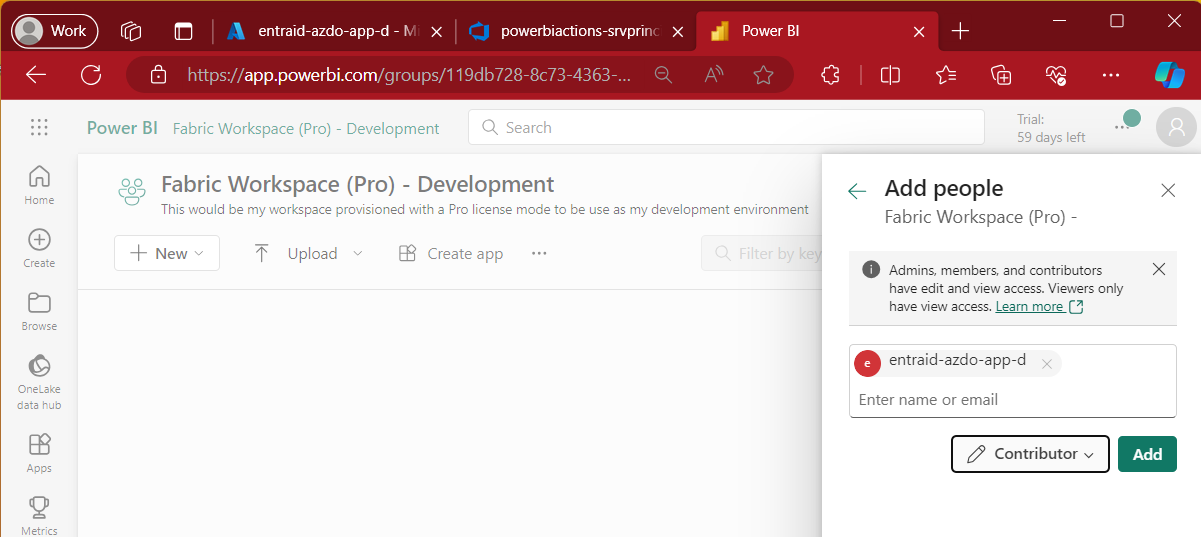

Grant Service Connection Security principal access to Fabric Workspaces

Remember that I told you to take note of the Entra's ID Application use to configure your AzDO Service Connection? Go to the Workspace access and grant the Application Contributor role.

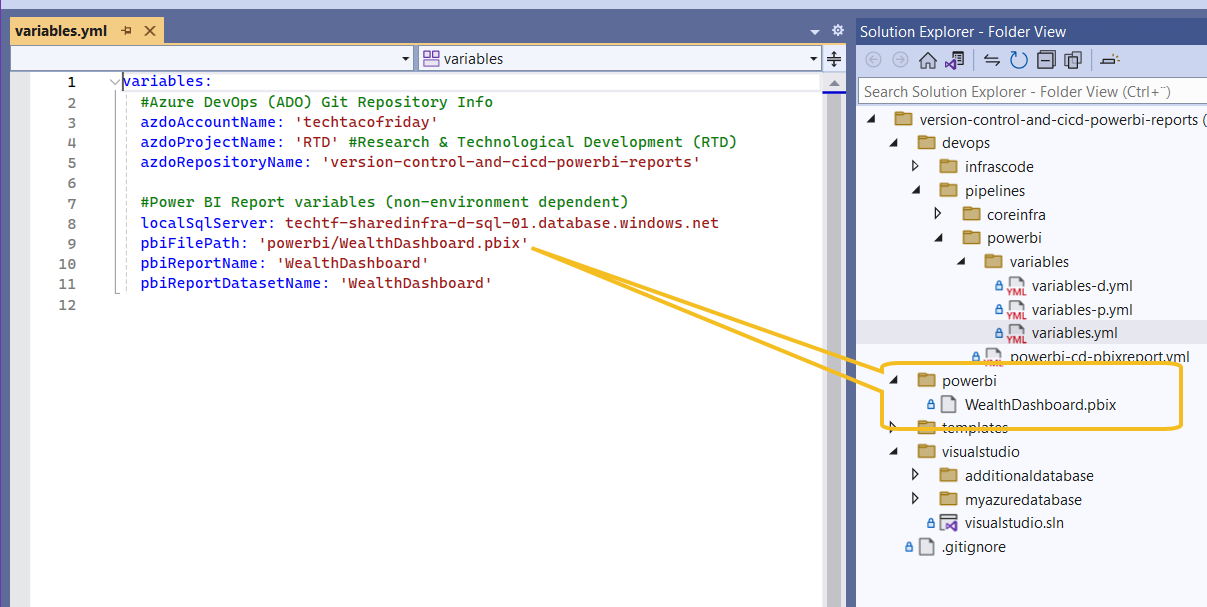

Including the PBIX file

At this point, I think we should have all the pieces in place, next is to commit/push Power BI report's file (.pbix) to our repository

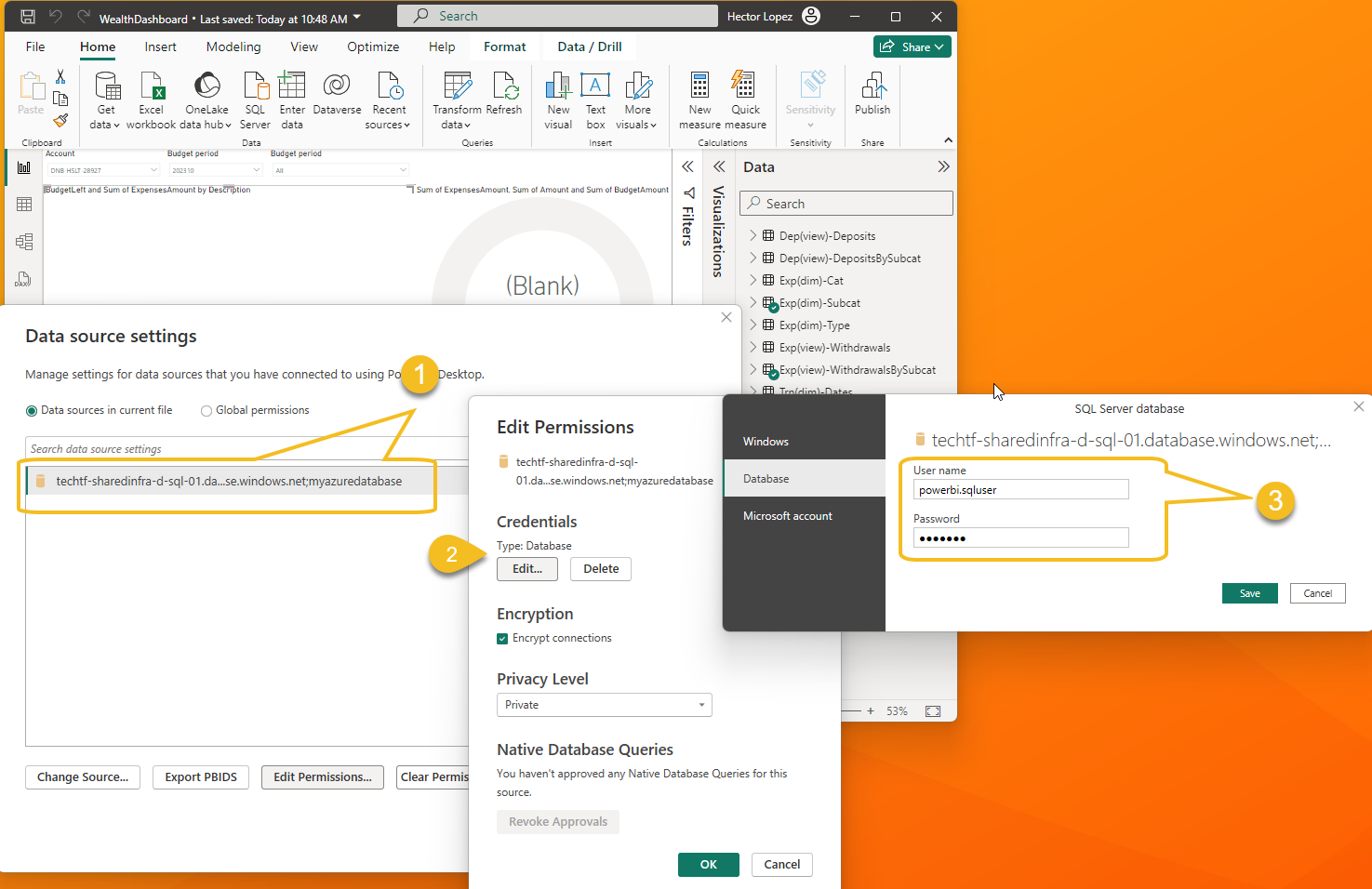

Image below shows my local report opened from Power BI Desktop, go to Data source settings you will see there's just one connection to a SQL Server/Database (1), credentials are of Database type (2), that means, we will connect via a SQL User & Password (3)

Commit & push report's file (.pbix) to the repository

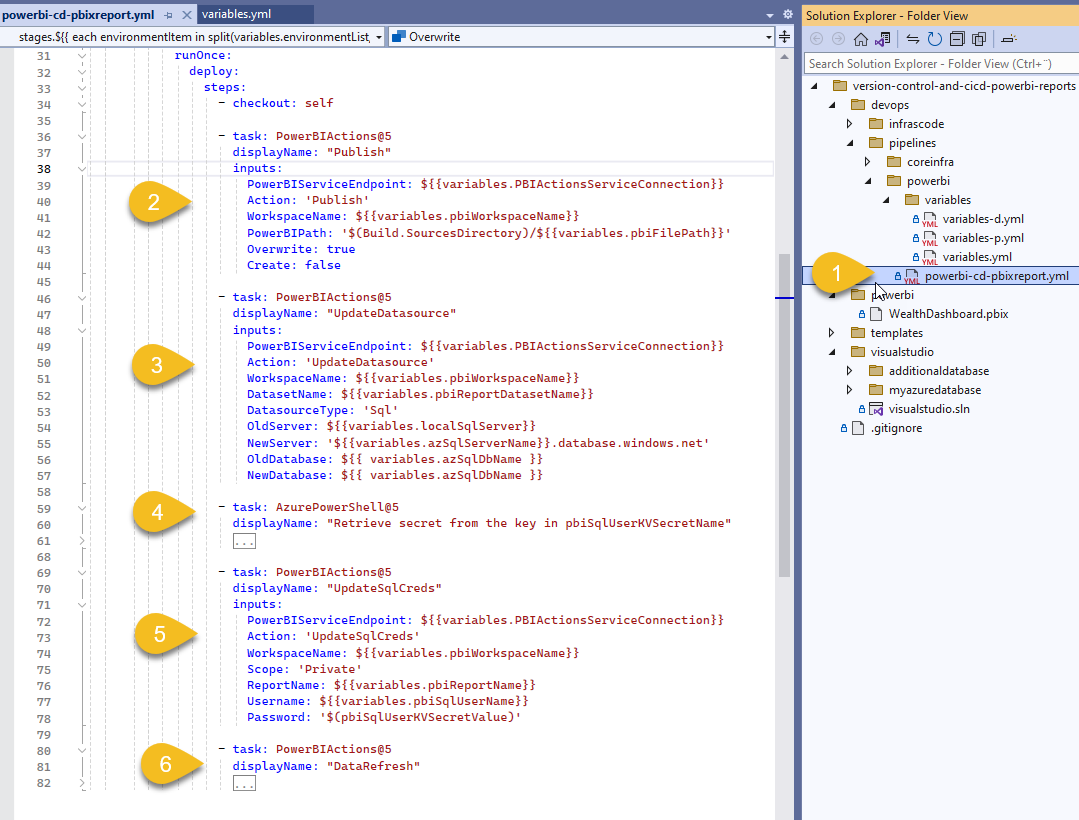

Deployment pipeline

Pipeline (1) uses the task provided by the Power BI Actions by Maik van der Gaag extension, each task is configured with a different Action.

First we Publish (2) the report to the workspace previously created, we then Update the Data Source (3) replacing the source configured locally in the (.pbix) file with the Server and Database defined in our environment variables, we then retrieve from the Key Vault the password dynamically generated for the SQL User (4) and we Update SQL Credentials with the fetched value (5) and finalize issuing a Data Refresh operation (6)

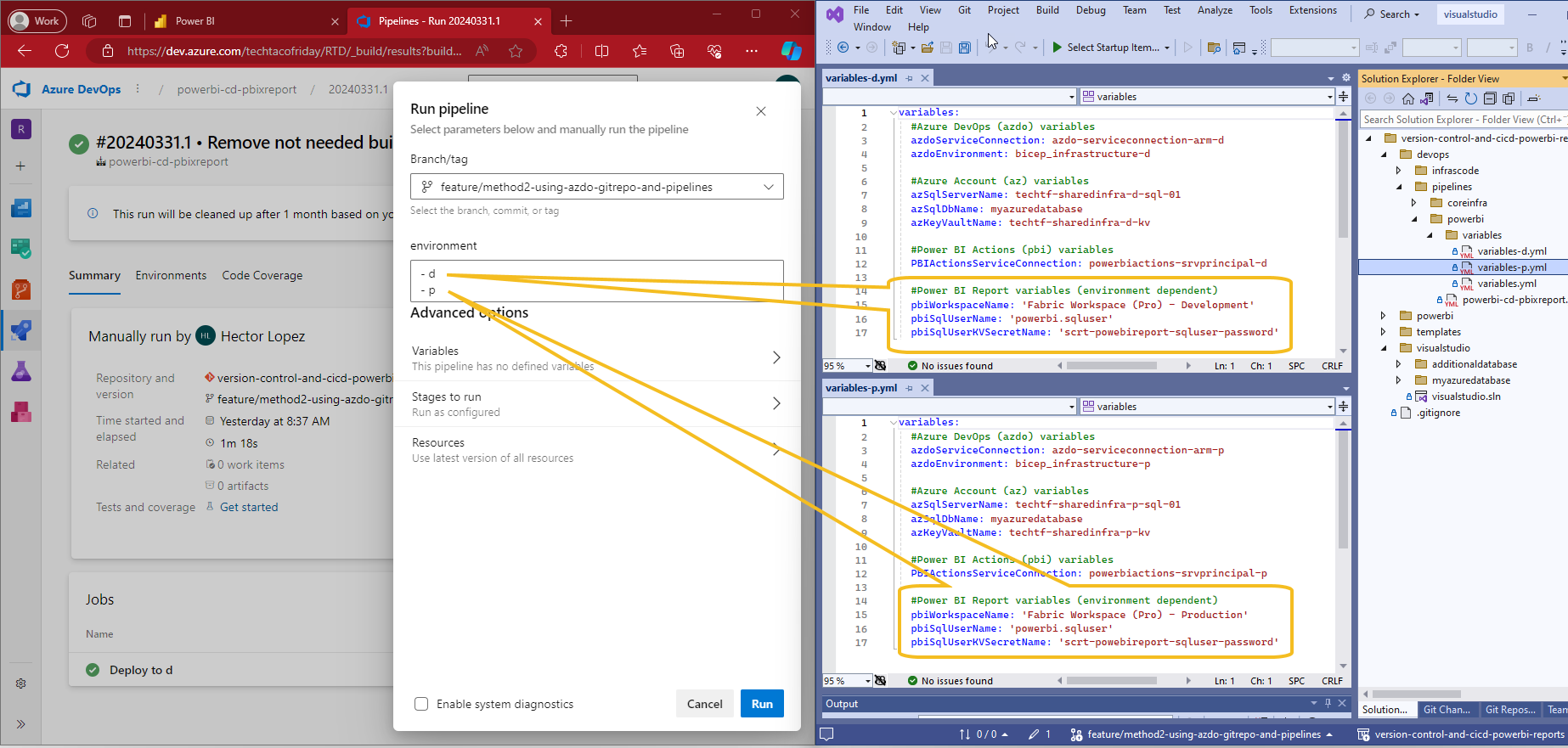

And there you go, you can add as many environments as you need by simply creating the Power BI workspaces and configuring corresponding values on the pipeline environment variable files! 😁👍

Conclusion

In conclusion, Method #2 utilizing DevOps Git Repos and Pipelines offers a powerful solution for Power BI Pro users seeking effective version control and deployment strategies. By leveraging Azure DevOps pipelines, even without Premium capacity-enabled workspaces, users can implement a seamless CI/CD cycle for their Power BI reports.

With version-controlled reports and automated deployment pipelines in place, Power BI users can confidently iterate on their projects, respond swiftly to changes, and maintain consistency across environments. Whether you're a solo analyst or part of a team, adopting Method #2 can revolutionize your Power BI workflow.

Call to action

I explained the code, now is your turn to ...